The 2024 Conference on Neural Information Processing Systems (NeurIPS), the premier conference in the field of AI, begins today, and the Amazon papers accepted there display the breadth of the company’s AI research.

Large language models (LLMs) and other foundation models have dominated the field for the past few years, and Amazon’s papers reflect that trend, covering topics such as retrieval-augmented generation, the use of LLMs for code generation, commonsense reasoning, and multimodal models. Training methodology also emerges as an area of focus, with papers on memory-efficient training, reinforcement learning with human feedback, classification with rejection, and convergence rates in transformer models.

But Amazon’s papers also demonstrate an abiding interest in topics such as bandit problems (long a staple of Amazon’s NeurIPS submissions) and speech processing, as well as newer concerns such as the application of machine learning to scientific computing and automated reasoning. And one paper, “B’MOJO: Hybrid state space realizations of foundation models with eidetic and fading memory,” proposes a new paradigm for machine learning, rooted in the concept of transductive learning.

Automated reasoning

Neural model checking

Mirco Giacobbe, Daniel Kroening, Abhinandan Pal, Michael Tautschnig

Bandit problems

Adaptive experimentation when you can't experiment

Yao Zhao, Kwang-Sung Jun, Tanner Fiez, Lalit Jain

Online posterior sampling with a diffusion prior

Branislav Kveton, Boris Oreshkin, Youngsuk Park, Aniket Deshmukh, Rui Song

Code generation

Training LLMs to better self-debug and explain code

Nan Jiang, Xiaopeng Li, Shiqi Wang, Qiang Zhou, Baishakhi Ray, Varun Kumar, Xiaofei Ma, Anoop Deoras

Commonsense reasoning

Can language models learn to skip steps?

Tengxiao Liu, Qipeng Guo, Xiangkun Hu, Jiayang Cheng, Yue Zhang, Xipeng Qiu, Zheng Zhang

Computational fluid dynamics

WindsorML: High-fidelity computational fluid dynamics dataset for automotive aerodynamics

Neil Ashton, Jordan B. Angel, Aditya S. Ghate, Gaetan K. W. Kenway, Man Long Wong, Cetin Kiris, Astrid Walle, Danielle Maddix Robinson, Gary Page

LLM evaluation

SetLexSem Challenge: Using set operations to evaluate the lexical and semantic robustness of language models

Bardiya Akhbari, Manish Gawali, Nicholas Dronen

Memory management

Online weighted paging with unknown weights

Orin Levy, Aviv Rosenberg, Noam Touitou

Model architecture

B’MOJO: Hybrid state space realizations of foundation models with eidetic and fading memory

Luca Zancato, Arjun Seshadri, Yonatan Dukler, Aditya Golatkar, Yantao Shen, Ben Bowman, Matthew Trager, Alessandro Achille, Stefano Soatto

Privacy

Pre-training differentially private models with limited public data

Zhiqi Bu, Xinwei Zhang, Sheng Zha, Mingyi Hong

Reconstruction attacks on machine unlearning: Simple models are vulnerable

Martin Bertran Lopez, Shuai Tang, Michael Kearns, Jamie Morgenstern, Aaron Roth, Zhiwei Steven Wu

Retrieval-augmented generation (RAG)

RAGChecker: A fine-grained framework for diagnosing retrieval-augmented generation

Dongyu Ru, Lin Qiu, Xiangkun Hu, Tianhang Zhang, Peng Shi, Shuaichen Chang, Cheng Jiayang, Cunxiang Wang, Shichao Sun, Huanyu Li, Zizhao Zhang, Binjie Wang, Jiarong Jiang, Tong He, Zhiguo Wang, Pengfei Liu, Yue Zhang, Zheng Zhang

Speech processing

CA-SSLR: Condition-aware self-supervised learning representation for generalized speech processing

Yen-Ju Lu, Jing Liu, Thomas Thebaud, Laureano Moro-Velazquez, Ariya Rastrow, Najim Dehak, Jesus Villalba

Training methods

CoMERA: Computing- and memory-efficient training via rank-adaptive tensor optimization

Zi Yang, Ziyue Liu, Samridhi Choudhary, Xinfeng Xie, Cao Gao, Siegfried Kunzmann, Zheng Zhang

Optimal design for human preference elicitation

Subhojyoti Mukherjee, Anusha Lalitha, Kousha Kalantari, Aniket Deshmukh, Ge Liu, Yifei Ma, Branislav Kveton

Rejection via learning density ratios

Alexander Soen, Hisham Husain, Philip Schulz, Vu Nguyen

Unraveling the gradient descent dynamics of transformers

Bingqing Song, Boran Han, Shuai Zhang, Jie Ding, Mingyi Hong

Video

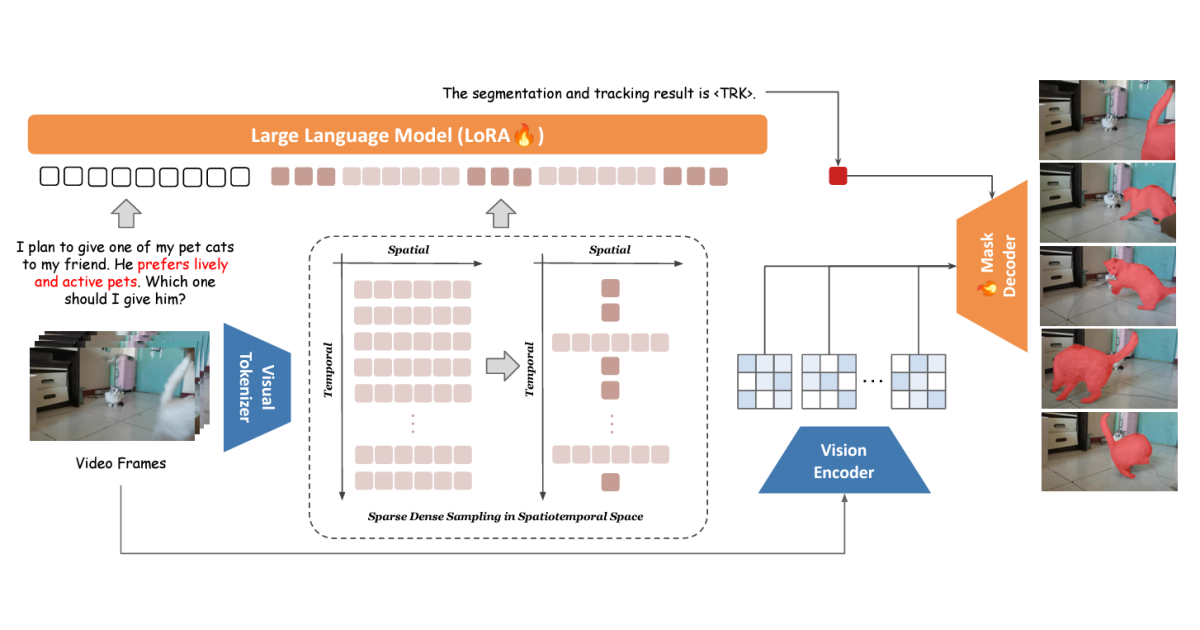

One token to seg them all: Language instructed reasoning segmentation in videos

Zechen Bai, Tong He, Haiyang Mei, Pichao Wang, Ziteng Gao, Joya Chen, Lei Liu, Zheng Zhang, Mike Zheng Shou

Video token merging for long-form video understanding

Seon Ho Lee, Jue Wang, Zhikang Zhang, David Fan, Xinyu (Arthur) Li

Vision-language models

Unified lexical representation for interpretable visual-language alignment

Yifan Li, Yikai Wang, Yanwei Fu, Dongyu Ru, Zheng Zhang, Tong He