Most of today’s cutting-edge AI models are based on the transformer architecture, which is characterized by its use of an attention mechanism. In a large language model (LLM), for example, the transformer determines which words in the text string should be given special attention when generating the next word; in a vision language model, it can determine which words in an instruction to attend to when computing pixel values.

Given the increasing importance of transformer models, we naturally want to better understand their dynamics: for example, whether the training process will converge on a useful model and how quickly, or which architectural variations work best for which purposes. However, the complexity of the attention mechanism makes traditional analytical tools difficult to apply.

Last week, at the 2024 Conference on Neural Information Processing Systems (NeurIPS), we presented a new analysis of the transformer architecture. First, we identified hyperparameters and initialization conditions that provide a probabilistic guarantee of convergence to a globally optimal solution.

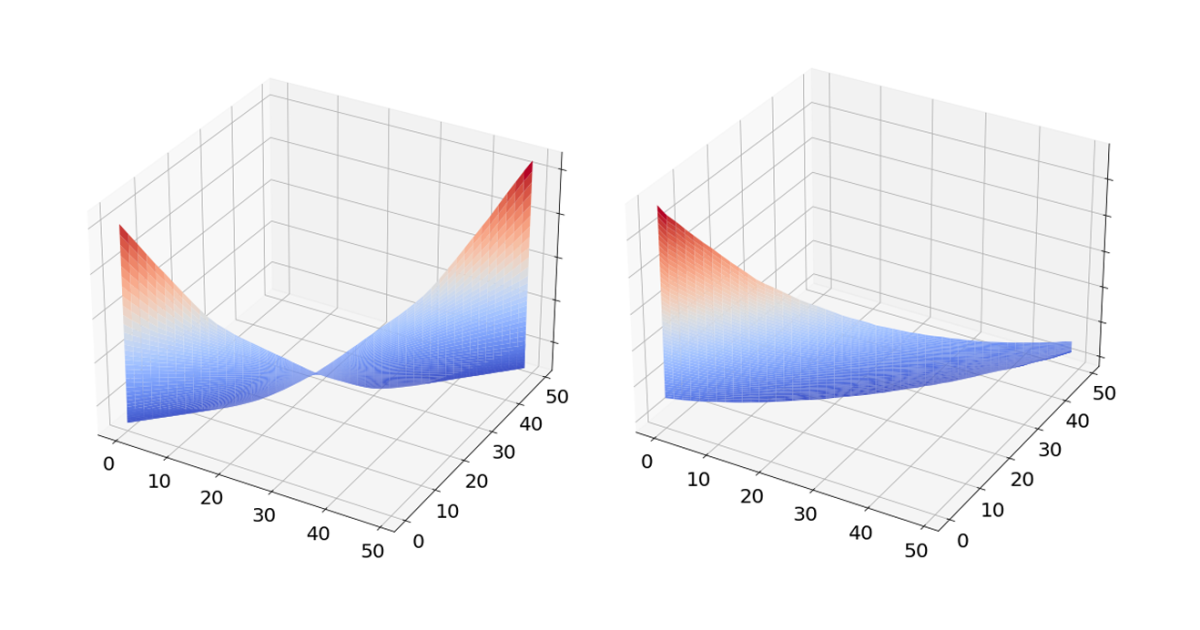

Through ablation studies, we also showed that the choice of attention kernel (the function used to calculate the attention weights) affects the rate of convergence. Specifically, the Gaussian kernel sometimes enables convergence when the more common softmax kernel does not. Finally, we conducted an empirical study showing that in some specific settings, models trained using the Gaussian kernel converged faster than models trained using the softmax kernel, owing to a smoother optimization landscape.

A tale of three matrices

In a transformer, the attention computation involves three matrices: the query matrix, the key matrix, and the value matrix. All three are used to produce encodings of input data. In a self-attention mechanism, which compares an input to itself (as in the case of LLMs), the query and key matrices are applied to the same input. In a cross-attention mechanism, they are applied to different inputs: in a multimodal model, for example, one matrix can be used to encode text, while the other is used to encode images.
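The difference between the two cases comes down to which input each matrix is applied to. The following is a minimal NumPy sketch, not code from the paper: the dimensions, the random matrices standing in for learned weights, and the helper name `project` are hypothetical, and it assumes the common convention that values are computed from the same input as the keys.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_k, d_v = 8, 4, 4  # illustrative sizes (assumed)

# Random stand-ins for the learned query, key, and value matrices.
W_Q = rng.normal(size=(d_model, d_k))
W_K = rng.normal(size=(d_model, d_k))
W_V = rng.normal(size=(d_model, d_v))

def project(x_query_side, x_key_side):
    """Encode inputs: queries from one input, keys and values from the other.

    Passing the same array twice gives self-attention.
    """
    Q = x_query_side @ W_Q
    K = x_key_side @ W_K
    V = x_key_side @ W_V
    return Q, K, V

text = rng.normal(size=(5, d_model))   # 5 text-token embeddings (toy data)
image = rng.normal(size=(9, d_model))  # 9 image-patch embeddings (toy data)

# Self-attention: query and key matrices applied to the same input.
Q_self, K_self, V_self = project(text, text)

# Cross-attention: text supplies the queries, image patches supply keys and values.
Q_cross, K_cross, V_cross = project(text, image)

print(Q_self.shape, K_self.shape)    # (5, 4) (5, 4)
print(Q_cross.shape, K_cross.shape)  # (5, 4) (9, 4)
```

In the cross-attention case the query and key encodings have different numbers of rows, so the kernel compares every text token against every image patch.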

The attention kernel defines an operation performed on the query and key encodings; the result of the operation indicates the relevance of one set of inputs to another (or to itself). The encoding produced by the value matrix represents the semantic properties of the data. The result of the kernel operation is multiplied by the encodings produced by the value matrix, emphasizing some semantic features and de-emphasizing others. The result is essentially a recipe for the semantic content of the model’s next output.
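To make the kernel operation concrete, here is a minimal NumPy sketch of softmax and Gaussian attention. The exact kernel definitions used in the paper may differ: this assumes the standard scaled dot-product softmax and a Gaussian kernel `exp(-||q_i - k_j||^2 / (2 sigma^2))` normalized so that each row sums to 1. The function names and the bandwidth `sigma` are illustrative.

```python
import numpy as np

def softmax_kernel(Q, K):
    """Row-wise softmax of scaled dot products q_i . k_j / sqrt(d)."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(scores)
    return w / w.sum(axis=1, keepdims=True)

def gaussian_kernel(Q, K, sigma=1.0):
    """exp(-||q_i - k_j||^2 / (2 sigma^2)), row-normalized (assumed form)."""
    sq_dist = ((Q[:, None, :] - K[None, :, :]) ** 2).sum(axis=-1)
    # Subtracting each row's minimum cancels out in the normalization
    # but keeps exp() from underflowing to zero.
    sq_dist = sq_dist - sq_dist.min(axis=1, keepdims=True)
    w = np.exp(-sq_dist / (2 * sigma**2))
    return w / w.sum(axis=1, keepdims=True)

def attend(Q, K, V, kernel):
    """Weight the value encodings by the kernel's relevance scores."""
    return kernel(Q, K) @ V

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))  # 6 tokens with 4 features each (toy data)
W_Q, W_K, W_V = (rng.normal(size=(4, 4)) for _ in range(3))  # stand-ins for learned weights
Q, K, V = X @ W_Q, X @ W_K, X @ W_V

out_softmax = attend(Q, K, V, softmax_kernel)
out_gauss = attend(Q, K, V, gaussian_kernel)
print(out_softmax.shape, out_gauss.shape)  # (6, 4) (6, 4)
```

Both kernels produce a matrix of nonnegative weights whose rows sum to 1; multiplying it by `V` mixes the value encodings according to those weights. The kernels differ only in how they score relevance: a dot product for softmax, a distance for the Gaussian.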

During model training, all three matrices are typically updated together. However, we analyzed the results of updating only subsets of the matrices while keeping the others fixed. This allowed us to identify which matrices and kernel functions exert the greatest influence on the rate of convergence. The results were as follows:

- If all three matrices can be updated, standard gradient descent (GD) can achieve global optimality with either the Gaussian or the softmax attention kernel;

- If only the value matrix can be updated, GD still achieves global optimality with either kernel;

- If only the query matrix can be updated, GD convergence is guaranteed only with the Gaussian kernel.
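Mechanically, this kind of ablation amounts to running gradient descent while freezing some of the matrices. The sketch below illustrates that mechanic on a toy single-layer softmax attention model with random data. It is not the paper's experimental setup, and it does not reproduce the results above. For simplicity it computes gradients by central finite differences rather than backpropagation. The names `train` and `trainable`, the learning rate, and the data sizes are all hypothetical.

```python
import numpy as np

def softmax_rows(S):
    S = S - S.max(axis=1, keepdims=True)
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)

def forward(params, X):
    """Toy single-layer softmax self-attention (no 1/sqrt(d) scaling)."""
    A = softmax_rows((X @ params["Q"]) @ (X @ params["K"]).T)
    return A @ (X @ params["V"])

def loss(params, X, Y):
    """Mean squared error against toy regression targets."""
    return float(np.mean((forward(params, X) - Y) ** 2))

def numerical_grad(params, name, X, Y, eps=1e-6):
    """Central-difference gradient of the loss w.r.t. one matrix (slow, illustrative)."""
    W = params[name]
    G = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        orig = W[idx]
        W[idx] = orig + eps
        up = loss(params, X, Y)
        W[idx] = orig - eps
        down = loss(params, X, Y)
        W[idx] = orig  # restore the entry
        G[idx] = (up - down) / (2 * eps)
    return G

def train(init, X, Y, trainable, lr=0.05, steps=200):
    """Plain GD that updates only the matrices named in `trainable`; the rest stay frozen."""
    params = {k: v.copy() for k, v in init.items()}
    history = [loss(params, X, Y)]
    for _ in range(steps):
        grads = {name: numerical_grad(params, name, X, Y) for name in trainable}
        for name, g in grads.items():
            params[name] -= lr * g
        history.append(loss(params, X, Y))
    return params, history

rng = np.random.default_rng(0)
X = 0.5 * rng.normal(size=(4, 3))  # 4 tokens, 3 features (toy data)
Y = rng.normal(size=(4, 3))        # toy targets
init = {name: rng.normal(size=(3, 3)) for name in ("Q", "K", "V")}

for trainable in (("Q", "K", "V"), ("V",), ("Q",)):
    _, hist = train(init, X, Y, trainable)
    print(trainable, f"loss {hist[0]:.4f} -> {hist[-1]:.4f}")
```

With only `V` trainable, the kernel weights stay fixed and the loss is a convex quadratic in `V`, so a small enough step size guarantees the loss never increases. With `Q` trainable the problem is nonconvex, and this toy setup offers no such guarantee.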

This suggests that the commonly used softmax kernel may have drawbacks in some cases, and we performed a set of experiments that support this intuition. On two different datasets, one for a text classification task and one for an image interpretation and segmentation task, we trained pairs of transformer models, one with a Gaussian kernel and one with a softmax kernel. On both tasks, the Gaussian kernel enabled faster convergence and higher accuracy in the resulting model.

Our analysis also indicates that, in theory, updates to the value matrix play the central role in convergence: the model's output depends linearly on the value matrix, which multiplies the results of the kernel operation, whereas the kernel operation itself is nonlinear in the query and key matrices.
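The linear/nonlinear distinction is easy to check numerically. This sketch uses NumPy, a softmax kernel without the usual `1/sqrt(d)` scaling, and random matrices as hypothetical stand-ins for model weights. It verifies superposition for the value matrix and shows that superposition fails for the query matrix.

```python
import numpy as np

def kernel_weights(X, W_Q, W_K):
    """Softmax kernel: nonlinear in W_Q and W_K."""
    S = (X @ W_Q) @ (X @ W_K).T
    S = S - S.max(axis=1, keepdims=True)
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)

def attention(X, W_Q, W_K, W_V):
    """Kernel weights times value encodings: linear in W_V."""
    return kernel_weights(X, W_Q, W_K) @ (X @ W_V)

rng = np.random.default_rng(1)
X = rng.normal(size=(5, 3))
W_Q, W_K, Q2, V1, V2 = (rng.normal(size=(3, 3)) for _ in range(5))
a, b = 2.0, -0.5

# Superposition holds for the value matrix (up to floating-point error).
lhs_v = attention(X, W_Q, W_K, a * V1 + b * V2)
rhs_v = a * attention(X, W_Q, W_K, V1) + b * attention(X, W_Q, W_K, V2)
print(np.allclose(lhs_v, rhs_v))  # True

# It fails for the query matrix: every row of a softmax sums to 1,
# but each row of a*A1 + b*A2 sums to a + b = 1.5.
lhs_q = kernel_weights(X, a * W_Q + b * Q2, W_K)
rhs_q = a * kernel_weights(X, W_Q, W_K) + b * kernel_weights(X, Q2, W_K)
print(np.allclose(lhs_q, rhs_q))  # False
```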

Finally, our paper also sets out a group of initialization conditions necessary to guarantee convergence. These include the requirements that the kernel matrix (the matrix of kernel-operation outputs) has full rank, meaning its columns are linearly independent, and that the ratio of the eigenvalues of the query and key matrices to the eigenvalue of the value matrix falls below a specified threshold.
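A minimal sketch of how such conditions might be checked at initialization follows. Only the full-rank test mirrors the text directly. The spectral ratio and the `ratio_threshold` parameter are hypothetical stand-ins, because the paper defines the precise quantity and threshold. The ratio shown here is the product of the largest singular values of the query and key matrices divided by the smallest singular value of the value matrix. The sketch uses singular values because the matrices need not be square or symmetric.

```python
import numpy as np

def kernel_matrix(X, W_Q, W_K):
    """Softmax kernel matrix: entry (i, j) is the weight of token j for query i."""
    S = (X @ W_Q) @ (X @ W_K).T
    S = S - S.max(axis=1, keepdims=True)  # numerical stability
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)

def check_init(X, W_Q, W_K, W_V, ratio_threshold):
    """Return (full_rank, ratio, ok) for two illustrative initialization checks."""
    K = kernel_matrix(X, W_Q, W_K)
    # Condition 1 (from the text): the kernel matrix has full column rank.
    full_rank = np.linalg.matrix_rank(K) == K.shape[1]
    # Condition 2 (HYPOTHETICAL stand-in): a spectral ratio below a threshold.
    s_q = np.linalg.svd(W_Q, compute_uv=False).max()
    s_k = np.linalg.svd(W_K, compute_uv=False).max()
    s_v = np.linalg.svd(W_V, compute_uv=False).min()
    ratio = float(s_q * s_k / s_v)
    return bool(full_rank), ratio, bool(full_rank and ratio < ratio_threshold)

X = np.eye(4)     # four one-hot "tokens" (toy data)
eye4 = np.eye(4)

# Identity projections: the kernel matrix has eigenvalues 1 and (e-1)/(e+3), so it is full rank.
full_rank, ratio, ok = check_init(X, eye4, eye4, eye4, ratio_threshold=2.0)
print(full_rank, round(ratio, 6), ok)  # True 1.0 True

# Zero query matrix: every row of the kernel matrix is uniform, so its rank is 1.
full_rank, ratio, ok = check_init(X, np.zeros((4, 4)), eye4, eye4, ratio_threshold=2.0)
print(full_rank, round(ratio, 6), ok)  # False 0.0 False
```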

Further details can be found in our paper. We hope that other members of the AI community will build on our analysis and deepen our understanding of transformers as they play an ever larger role in our everyday lives.