Teaching language models to reason consistently

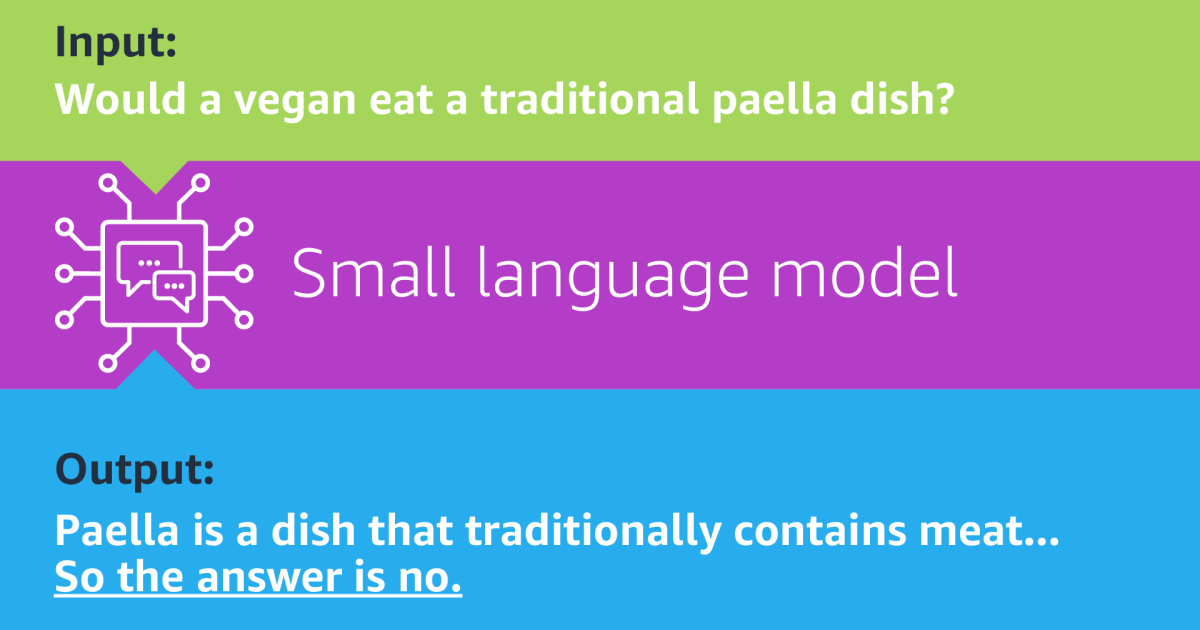

Teaching large language models (LLMs) to reason is an active research topic in natural-language processing. A popular approach to this problem is the so-called chain-of-thought paradigm, in which a model is asked not only to give an answer but also to provide a rationale for it. The structure of the type of prompt used to induce …
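To make the chain-of-thought idea concrete, here is a minimal sketch of a few-shot prompt in which each worked example pairs a question with a rationale and a final answer. It assumes a simple "Q:/A:" text format. The `Exemplar` class, the `build_cot_prompt` function, and the sample questions are hypothetical illustrations, not the prompt structure used in the work described here.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example: a question, its step-by-step rationale, and the answer."""
    question: str
    rationale: str
    answer: str


def build_cot_prompt(exemplars: list[Exemplar], query: str) -> str:
    """Assemble a hypothetical few-shot chain-of-thought prompt.

    Each exemplar shows the rationale before the final answer, which nudges
    the model to produce its own rationale before answering the new query.
    """
    parts = []
    for ex in exemplars:
        parts.append(f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}.")
    # The new question ends with "A:" so the model continues with its own reasoning.
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demo = [
        Exemplar(
            question="A box holds 3 red pens and 4 blue pens. How many pens are in the box?",
            rationale="There are 3 red pens and 4 blue pens. 3 + 4 = 7.",
            answer="7",
        )
    ]
    print(build_cot_prompt(demo, "Tom has 2 apples and buys 5 more. How many apples does he have?"))
```

The resulting string would be sent to a model as-is; because the exemplar places its reasoning before "The answer is …", the model is encouraged to follow the same rationale-then-answer pattern for the new question.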