Amazon announces new CMU graduate research fellows

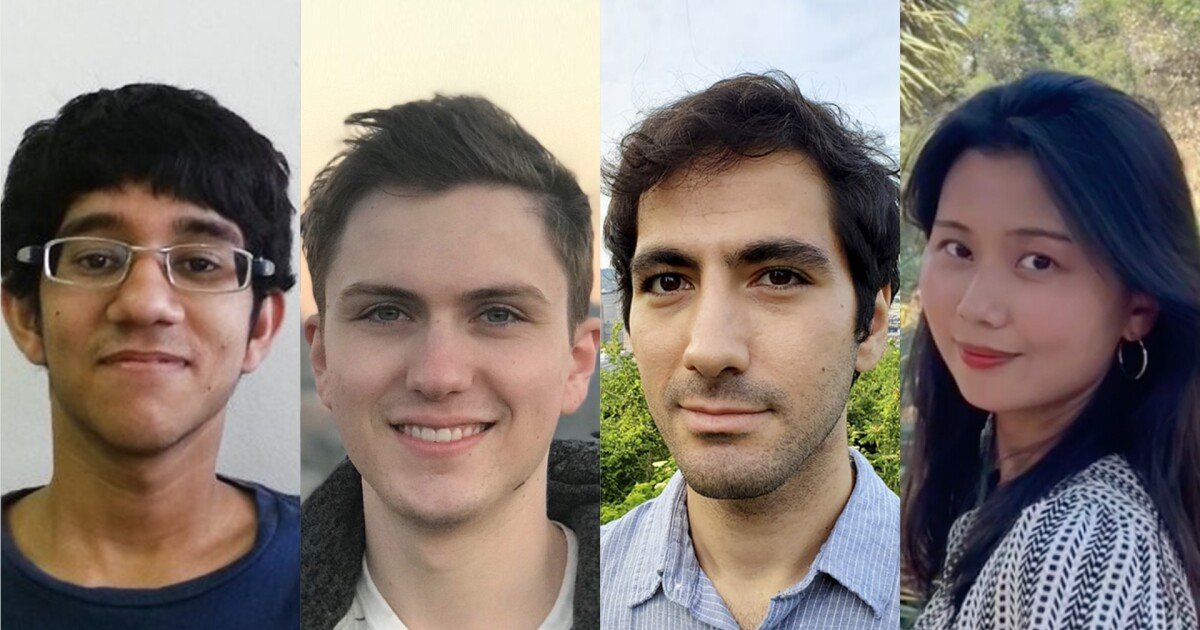

In March 2022, Amazon and Carnegie Mellon University announced the second class of Amazon Graduate Research Fellows, extending the company’s efforts to support the work of master’s and PhD students. Now the next round of fellows has been announced. The program, launched in 2021, supports graduate students dealing with …