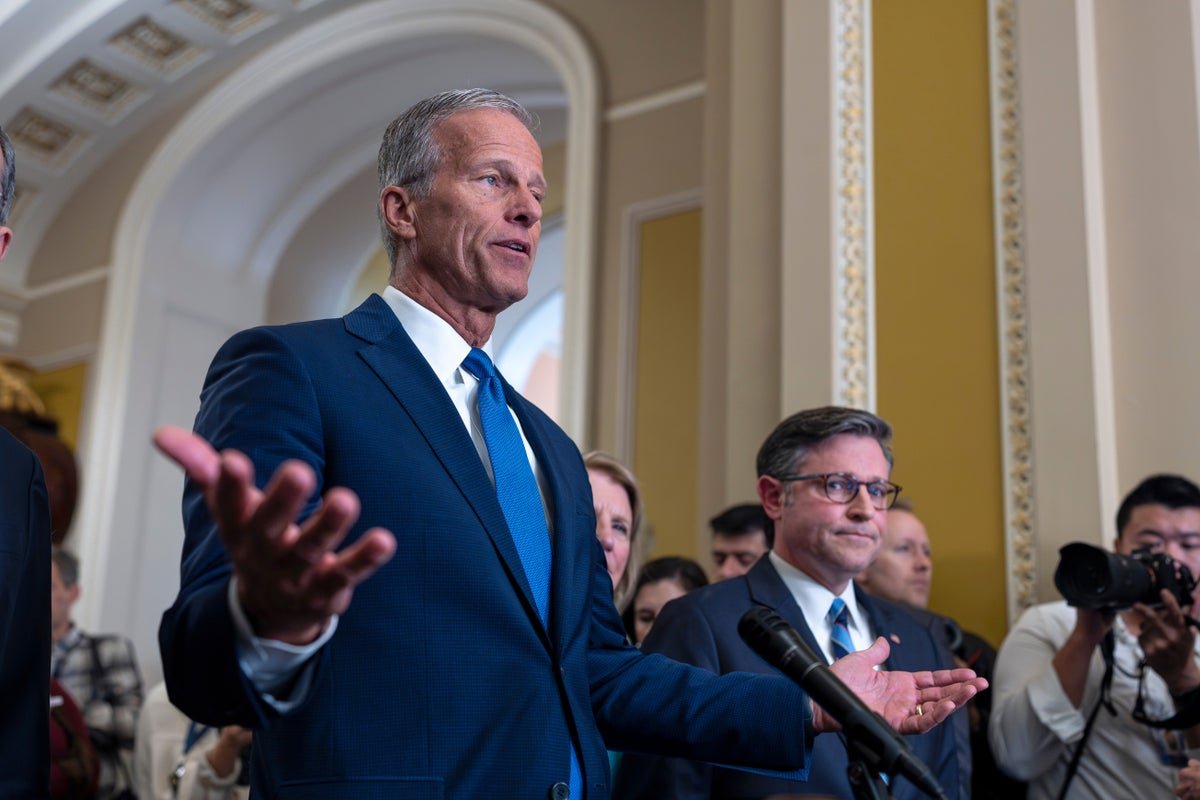

Jack Smith Suggests Public Testimony to Fight Trump Investigation ‘Mischaracterizations’

Jack Smith, the former special counsel who led several high-profile criminal investigations into Donald Trump, has asked congressional leaders to allow him to testify openly …