Chain-nighting reasoning, where a large language model (LLM) is asked not only to perform multistep actions, but to explain its reasons for taking the steps it makes has been shown to improve LLMS ‘reasoning. A promising use of chain-of-tank (COT) Reasoning is to ensure that LLMS complies with the responsible-IA police.

Using COT to optimize an LLM for political adherence to high quality training data commented with chains of thoughts. But hiring human annotators to generate such training data is expensive and time -consuming.

Inspired by the current work of incorporating artificial experts into standard LLM training pipeline, researchers in Amazon’s artificial general intelligence organization have begun exploring the possibility of using sets of AI agents to generate high quality COT data. We report the results of our original experience in a paper we presented at this Yey meeting in Association for Computational Linguistics (ACL).

Using two different LLMs and five different data sets we compared models fine -tuned on data created through our Multi-to-division Approach to both baseline -front models and models fine -tuned through monitored fine -tuning of conventional data.

Our approaches achieve an increase in average security (in domain, outside the domain and jailbreaks) of 96% compared to baseline and 73% compared to the conventionally fine-tuned model when using a non-security-trained model (mixtral). The increases were 12% and 44%, respective, we have security -trained model (QWEN).

Multi -Agent -Consideration

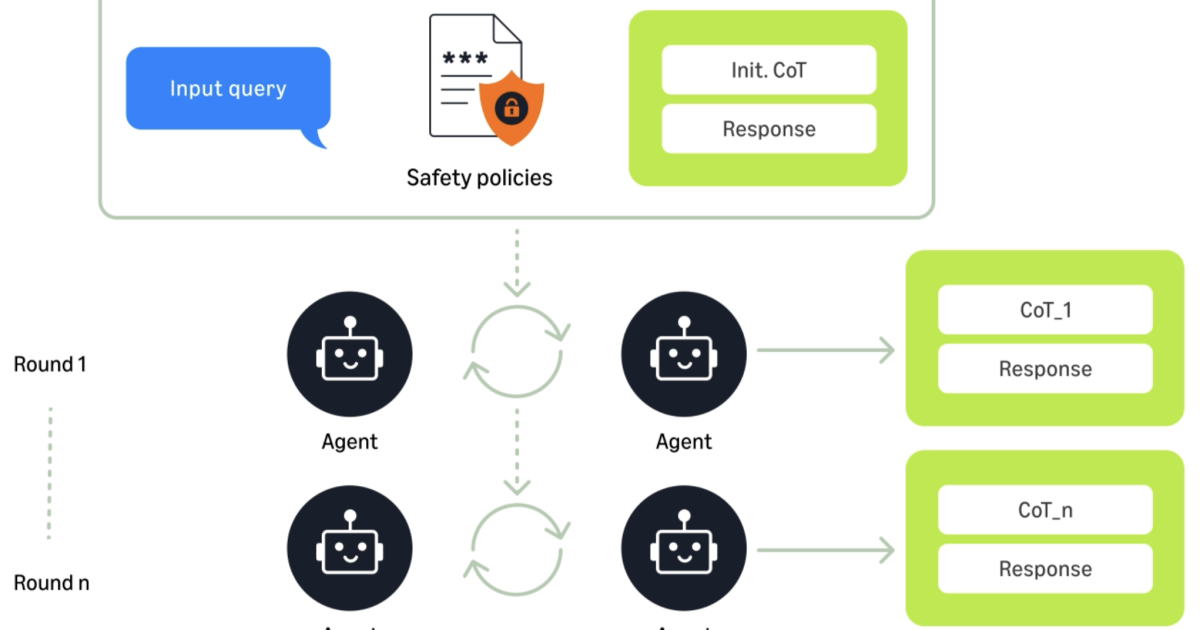

Our approaches divide the task of generating political-compressing thought chains into three phases, each using LLMs: Inteent degradation,,,,,,,, Considerationand Refinement.

DISING Inteent degradationAn LLM receives the user request and identifies explicitly and implicit user content. These along with the query are then transferred to another LLM that generates an initial cot.

Consideration is an iterative process where several LLMs (agents) expand the cot in a sequential way, taking into a defined set of police. Each agent is quick to review and correct the version of the cot it receives – or to confirm that it is good as it is. This internship ends when an agent assesses COT complete or when a predefined consideration budget is exhausted.

Finally, I. Refinement Internship, an LLM takes output from the consideration step, and after the processors to filter them to filter reduent, dotive and political inconsistent thoughts.

Assessment

After previous work, we analyze the quality of the generated cots by measuring three fine -grained attributes: (1) Relance, (2) context and (3) completeness. Each attributes is evaluated on a scale of 1 to 5, where 1 represents the lowest quality and 5 represents the highest. As a test data, we used examples from several standard COT -Benchmark -Data Sets.

We also evaluate faithfulness along three dimensions: (1) faithfulness between politics and the generated cot; (2) Faithfulness between politics and the generated response; And (3) faithfulness between the generated cot and the final response. We use an LLM fine-tuned as an auto-classing to evaluate factority on a scale of 1 to 5, with 1 minimal faithfulness indicating, and 5 complaints complete compliance.

As can be in the table below using our framework provides improvements in quality across all measurements with an improvement of more than 10% in Cots’ political faithfulness.

Average auto-classification results on the generated COT datasets (1-5 scale), including overall furious measurements to evaluate the quality of cots and faithfulness metrics to evaluate political compliance.

|

Metric |

Llm_zs |

AIDSAFE |

Delta |

|

Elevator |

4.66 |

4.68 |

0.43% |

|

Texture |

4.93 |

4.96 |

0.61% |

|

Completeness |

4.86 |

4.92 |

1.23% |

|

Cost ‘Faithfulness (Politics) |

3.85 |

4.27 |

10.91% |

|

Responses Faithfulness (Policy) |

4.85 |

4.91 |

1.24% |

|

Responses Faithfulness (COT) |

4.99 |

5 |

0.20% |

Fine tuning

We use several benchmarks to measure the performance improvements provided by our generated child bed data: BEVEL (for security), wildchat, x test (for overrefusal or nerroneously marking safe generations as uncertain), MMLU (utility) and Strongrex (for jailbreak robustness).

We used two different LLMs in our tests, the widely used open source models Qwen and Mixtral. The basic versions of these models provide a baseline and we add another baseline by fine-tuning these models with only the prompts and answers from the original data set-the generated cots. Our method shows significant improvises in relation to baseline, specifically on safety and jailbreak robustness, with some trade -offs on utility and overfusal.

Below are the results of evaluation of the monitored fine -tuned (SFT) model. “Base” denotes LLM without SFT, SFT_and denotes the model, which is sft’d on the original response data without cots, and SFT_DB denotes the model SFT’D on our generated cots and answers. (If the full table does not fit on your browser, try to scroll to the right.)

LLM: Mixtral

|

Assess |

Dimension |

Metric |

Data set |

Basis |

Sft_and |

Sft_db (bear) |

|

Security |

Safe answer |

missing |

Beaverts |

76 |

79.57 |

96 |

|

Wildchat |

31 |

33.5 |

85.95 |

|||

|

Overrefusal |

1-overrefuse |

missing |

Xstst |

98.8 |

87.6 |

91.84 |

|

Tool |

Answer |

battery |

MMLU |

35.42 |

31.38 |

34.51 |

|

Jailbreak Robustness |

Safe answer |

missing |

String injection |

51.09 |

67.01 |

94.04 |

Llm: Qwen

|

Assess |

Dimension |

Metric |

Data set |

Basis |

Sft_and |

Sft_db (bear) |

|

Security |

Safe answer |

missing |

Beaverts |

94.14 |

87.95 |

97 |

|

Wildchat |

And |

And |

And |

95.5 |

59.42 |

96.5 |

|

Overrefusal |

1-overrefuse |

missing |

Xstst |

99.2 |

98 |

93.6 |

|

Tool |

Answer |

battery |

MMLU |

75.78 |

55.73 |

60.52 |

|

Jailbreak Robustness |

Safe answer |

missing |

String injection |

72.84 |

59.48 |

95.39 |

Recognitions: We would like to recognize our Coauthors and partners, Kai-Wei Chang, Ninareh Mehrabi, Anil Ramakrishna, Xinyan Zhao, Aram Gallstyan, Richard Zemel and Rahul Gupta for their contributions.