Many machine learning (ML) applications involve embedding data in a representation space, where the geometric relationships between embeddings carry semantic content. Performing a useful task often involves retrieving an embedding's nearest neighbors in that space: for example, the answer embeddings near a query embedding, the image embeddings near the embedding of a text description, the text embeddings in one language near a text embedding in another, and so on.

A popular way to ensure that retrieved embeddings accurately reflect the intended semantics is deep metric learning, which is often used to train contrastive-learning models such as the vision-language model CLIP. In deep metric learning, the ML model learns to structure the representation space according to a specified metric, so as to maximize the distance between dissimilar training samples while promoting proximity among similar ones.

One drawback of deep metric learning (DML), however, is that both the distances between embeddings of the same class and the distances between embeddings of different classes can vary. This is a problem in many real-world applications, where you want a single distance threshold that meets specific false-positive and false-negative rate requirements. If both the interclass and intraclass distances vary, no single threshold is optimal in all cases. This can cause significant deployment complexity in large-scale applications, as individual users may require different threshold settings.

At this year’s International Conference on Learning Representations (ICLR), my colleagues and I presented a way to make the distances between DML embeddings more consistent, so that a single threshold yields fair fractions of retrieved results across classes.

First, we propose a new evaluation metric for measuring DML models’ threshold consistency, called the operating-point-inconsistency score (OPIS), which we use to show that optimizing model accuracy does not optimize threshold consistency. Then we propose a new loss term, which can be added to any loss function and backbone architecture for training a DML model, that regularizes distances between both hard-positive intraclass and hard-negative interclass embeddings, to make distance thresholds more consistent. This helps ensure consistent accuracy across customers, even amid significant variations in their query data.

To test our approach, we used four benchmark image data sets, and with each one we trained eight networks: four of the networks were residual networks, trained with two different loss functions, each with and without our added term; the other four were vision transformer networks, also trained with two different DML loss functions, each with and without our added term.

In the resulting 16 comparisons, incorporating our loss term markedly improved threshold consistency across all experiments, reducing the OPIS inconsistency score by up to 77.3%. Integrating our proposed loss also improved accuracy in 14 of the 16 comparisons, with the largest improvement being 3.6% and the largest decrease being 0.2%.

Measuring consistency

DML models are typically trained using contrastive learning, in which the model receives pairs of inputs that are either of the same class or of different classes. During training, the model learns an embedding scheme that pushes data of different classes apart and pulls data of the same class together.
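As a rough illustration of this push-and-pull objective, here is a minimal sketch of one classic pairwise contrastive loss. It is not necessarily one of the loss functions used in the experiments described here; the function name, the use of Euclidean distance, and the `margin` default are assumptions made for illustration.

```python
import numpy as np

def contrastive_pair_loss(emb_a, emb_b, same_class, margin=1.0):
    """Classic pairwise contrastive loss, averaged over a batch of pairs.

    emb_a, emb_b: arrays of shape (num_pairs, dim), one embedding per pair member.
    same_class:   boolean array, True where the pair shares a class label.
    margin:       hypothetical distance beyond which different-class pairs
                  are no longer penalized.
    """
    emb_a = np.asarray(emb_a, dtype=float)
    emb_b = np.asarray(emb_b, dtype=float)
    same_class = np.asarray(same_class, dtype=bool)

    dist = np.linalg.norm(emb_a - emb_b, axis=1)

    # Same-class pairs are pulled together: any distance is penalized.
    pull = dist ** 2
    # Different-class pairs are pushed apart until they clear the margin.
    push = np.maximum(0.0, margin - dist) ** 2

    return float(np.where(same_class, pull, push).mean())
```

Note that nothing in this objective asks for the same distance scale in every class, which is why a low training loss does not by itself imply threshold consistency.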

As the separation between classes increases and the separation within classes decreases, you might expect the embeddings for each class to become highly compact, leading to a high degree of distance consistency across classes. But we show that this is not the case, even for models with very high accuracies.

Our evaluation metric, OPIS, relies on a utility score that measures a model's accuracy at different thresholds. We use the standard F1 score, which factors in both the false-acceptance and false-rejection rates, and to which a weighting term can be added to emphasize one rate over the other.
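To make the idea of a utility score concrete, the following is a minimal sketch of an F-score-style utility computed at a single threshold. The exact formula is an assumption: it takes the weighted harmonic mean of the true-rejection rate (1 − false-acceptance rate) and the true-acceptance rate (1 − false-rejection rate), with a hypothetical `beta` weight, and it accepts a pair when its cosine similarity is at or above the threshold.

```python
import numpy as np

def utility_score(similarities, same_class, threshold, beta=1.0):
    """F-beta-style utility at one threshold (illustrative form, an assumption).

    similarities: cosine similarity of each pair.
    same_class:   True where the pair shares a class label.
    beta:         hypothetical weight; beta > 1 emphasizes the acceptance side.
    """
    similarities = np.asarray(similarities, dtype=float)
    same_class = np.asarray(same_class, dtype=bool)
    accepted = similarities >= threshold

    # False-acceptance rate: share of different-class pairs that are accepted.
    far = accepted[~same_class].mean() if (~same_class).any() else 0.0
    # False-rejection rate: share of same-class pairs that are rejected.
    frr = (~accepted[same_class]).mean() if same_class.any() else 0.0

    true_reject, true_accept = 1.0 - far, 1.0 - frr
    denom = beta ** 2 * true_reject + true_accept
    if denom == 0.0:
        return 0.0
    return float((1.0 + beta ** 2) * true_reject * true_accept / denom)
```

Evaluating this function over a grid of thresholds gives the utility-threshold curve for a class; with `beta=1.0` it weighs the two error rates equally.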

Then we define a range of thresholds that we call the calibration range, which is typically based on the target performance metric in some way. For example, it can be chosen to impose bounds on the false-acceptance or false-rejection rate. We then calculate the average difference between the utility score for a given choice of threshold and the average utility score over the complete range of thresholds. As can be seen in the graph of utility vs. threshold distance, the utility-threshold curve can vary significantly for different classes of data in the same data set.
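A minimal sketch of an OPIS-style inconsistency score follows. It rests on several assumptions and should not be taken as the exact definition: utility is the unweighted harmonic mean of the true-acceptance and true-rejection rates, the reference at each threshold is the utility averaged across classes, and deviations are squared and averaged over a discrete grid covering the calibration range. The names `utility` and `opis_sketch` are hypothetical.

```python
import numpy as np

def utility(sims, same, threshold):
    # Harmonic mean of (1 - FAR) and (1 - FRR) at one threshold (assumed form).
    # Assumes at least one same-class and one different-class pair.
    sims = np.asarray(sims, dtype=float)
    same = np.asarray(same, dtype=bool)
    accepted = sims >= threshold
    true_reject = 1.0 - accepted[~same].mean()
    true_accept = accepted[same].mean()
    total = true_reject + true_accept
    return 0.0 if total == 0.0 else 2.0 * true_reject * true_accept / total

def opis_sketch(per_class_pairs, thresholds):
    """Mean squared gap between each class's utility curve and the mean curve.

    per_class_pairs: dict mapping class name -> (similarities, same_class flags).
    thresholds:      1-D grid of thresholds covering the calibration range.
    """
    curves = np.array([
        [utility(sims, same, t) for t in thresholds]
        for sims, same in per_class_pairs.values()
    ])                                  # shape: (num_classes, num_thresholds)
    mean_curve = curves.mean(axis=0)    # average utility across classes
    return float(((curves - mean_curve) ** 2).mean())
```

A score of zero means every class has the same utility-threshold curve over the calibration range; larger values mean a threshold that suits one class suits others less well.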

To gauge the relationship between performance and threshold consistency, we trained a range of models on the same data set using a variety of loss functions and batch sizes. We found that, among the lower-accuracy models, there was indeed a correlation between accuracy and threshold consistency. But beyond an inflection point, improved performance came at the expense of less consistent thresholds.

Better threshold consistency

To improve threshold consistency, we introduce a new regularization loss for DML training, called the threshold-consistent-margin (TCM) loss. TCM has two parameters. The first is a positive margin for mining hard positive data pairs, where “hard” denotes data items of the same class that have low cosine similarity (i.e., they are so dissimilar that it is difficult to assign them to the same class). The second is a negative margin for mining hard negative data pairs, where “hard” denotes data points of different classes that have high cosine similarity (i.e., they are so similar that it is difficult to assign them to different classes).

After mining these hard pairs, the loss term imposes a penalty that is proportional to the difference between the measured distance and the corresponding margin parameter, for the hard pairs exclusively. Like the calibration range, these values can be chosen to enforce bounds on the false-acceptance or false-rejection rates, although, because of distribution drift between training and test sets, we recommend that they be tuned to the data.
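The mining-and-penalty steps described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not a reference implementation: the default margin values, the `pos_weight` and `neg_weight` parameters, and the averaging over hard pairs are all hypothetical choices, and a real training setup would use an autodiff framework so that the term can be added to the base loss.

```python
import numpy as np

def tcm_loss_sketch(embeddings, labels, pos_margin=0.9, neg_margin=0.5,
                    pos_weight=1.0, neg_weight=1.0):
    """Margin penalty applied only to hard positive and hard negative pairs.

    embeddings: array of shape (batch, dim) with nonzero rows.
    labels:     class label of each row.
    Margins and weights are hypothetical defaults, to be tuned to the data.
    """
    emb = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)

    # L2-normalize so that dot products are cosine similarities.
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sims = emb @ emb.T

    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(labels), dtype=bool)   # ignore self-pairs

    # Hard positives: same class, similarity below the positive margin.
    hard_pos = same & off_diag & (sims < pos_margin)
    # Hard negatives: different class, similarity above the negative margin.
    hard_neg = ~same & (sims > neg_margin)

    # Penalty grows linearly with the gap to the margin; easy pairs contribute nothing.
    pos_term = (pos_margin - sims[hard_pos]).mean() if hard_pos.any() else 0.0
    neg_term = (sims[hard_neg] - neg_margin).mean() if hard_neg.any() else 0.0

    return float(pos_weight * pos_term + neg_weight * neg_term)
```

Because pairs that already clear their margin are excluded, the term leaves well-separated regions of the embedding space alone and acts only near class boundaries.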

In other words, our TCM loss term serves as a “local inspector”, selectively adjusting hard samples to prevent over-separateness and excessive compactness near the boundaries between classes. Compared with a model trained without it, a model trained with our regularization term shows more consistent threshold distances across data classes.

Below are the results of our experiments on four benchmark data sets, using two models for each and two versions of two loss functions for each model:

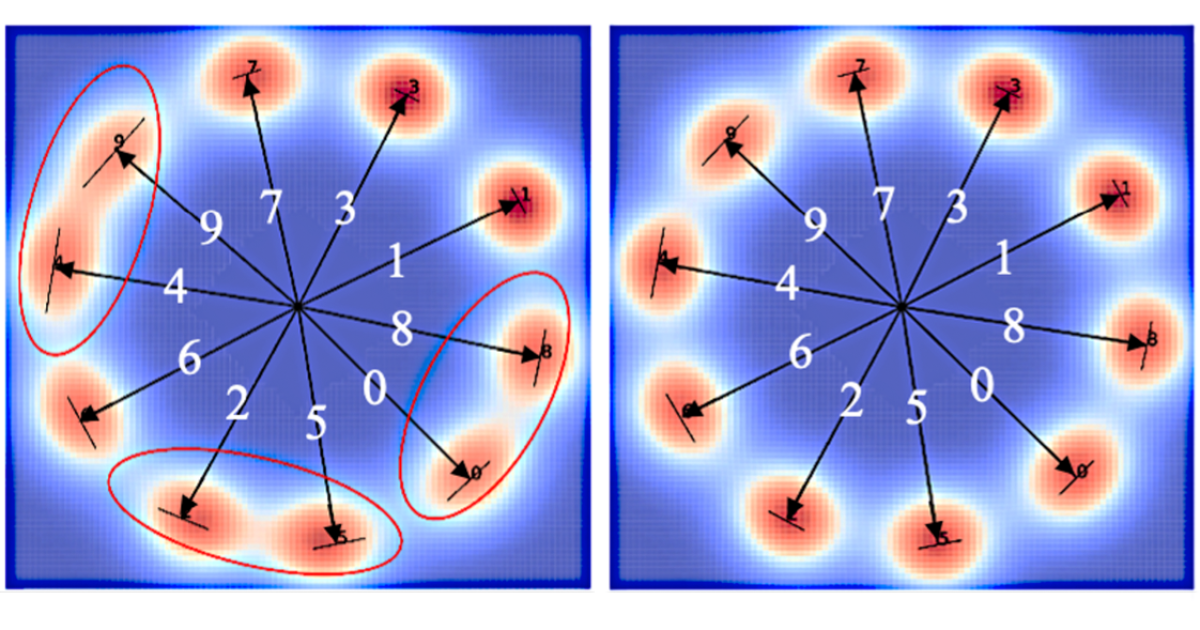

We also conducted a toy experiment using the MNIST data set of hand-drawn digits to visualize the effect of our proposed TCM regularization, where the task was to learn to group examples of the same digit together. The addition of our loss term led to more compact class clusters and clearer separation between clusters, as can be seen in the visualization below:

The addition of our TCM loss term may not lead to dramatic improvements in every case. But given that it can be used, at no extra computational cost, with any choice of model and any choice of loss function, the occasions are rare when it would not be worth a try.