Large language models (LLMs) for code are models pretrained on source code rather than on natural-language text. They are remarkably good at completing code for arbitrary program functionality based solely on context. However, they struggle with new, large software development projects, where correct code completion can depend on API calls or functions defined elsewhere in the code repository.

Retrieval-augmented generation (RAG) addresses this problem by retrieving relevant context from the repository, enriching the model’s understanding and improving its output. But retrieval takes time and slows down generation: is it always the best choice?

In a paper we presented at this year’s International Conference on Machine Learning (ICML), we examined this question and found that 80% of the time, retrieval does not actually improve the quality of the generated code.

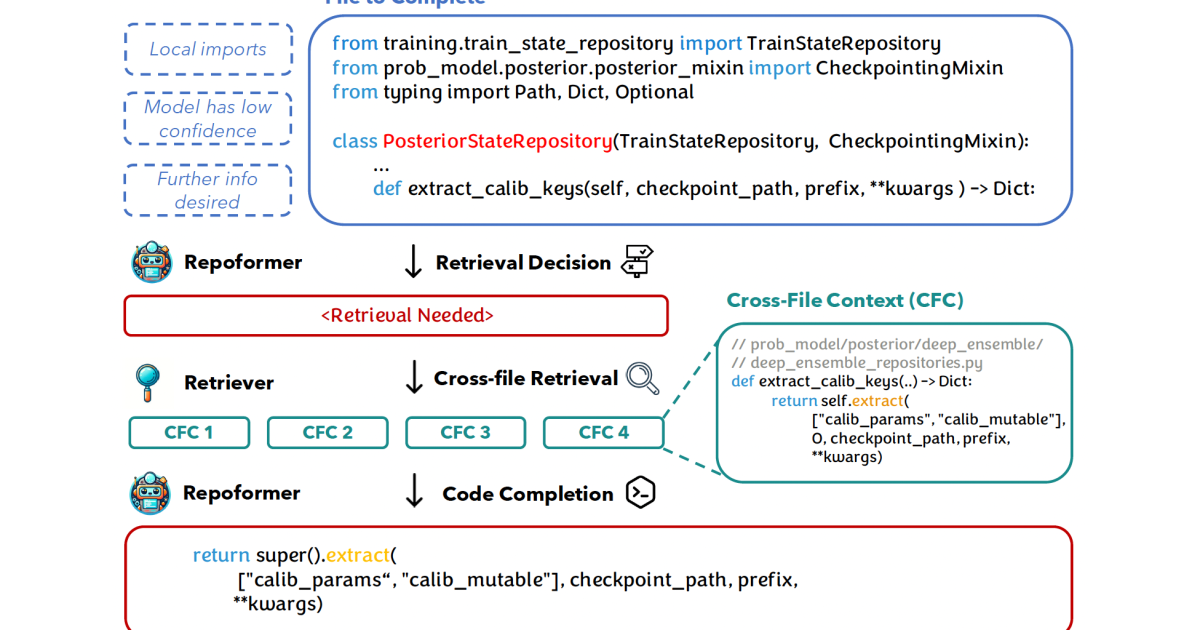

To tackle this inefficiency, we fine-tuned an LLM to determine whether or not retrieval is likely to help and to emit one of two special tokens, depending on the answer.
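As a rough illustration of how such a decision could be read off the model, the sketch below takes the logits of the two special tokens, converts them to a probability of retrieval with a two-way softmax, and retrieves only when that probability exceeds a threshold. The token strings `<retrieve>` and `<no_retrieve>`, the default threshold of 0.5, and the input format (a dict of next-token logits) are all hypothetical; the paper’s actual tokens and decision rule may differ.

```python
import math
from typing import Dict

# Hypothetical names for the two special tokens; the real tokens may differ.
RETRIEVE_TOKEN = "<retrieve>"
NO_RETRIEVE_TOKEN = "<no_retrieve>"


def retrieval_probability(next_token_logits: Dict[str, float]) -> float:
    """Two-way softmax over the special-token logits, returning P(retrieve)."""
    lr = next_token_logits[RETRIEVE_TOKEN]
    ln = next_token_logits[NO_RETRIEVE_TOKEN]
    # Subtract the larger logit before exponentiating, for numerical stability.
    m = max(lr, ln)
    e_r = math.exp(lr - m)
    e_n = math.exp(ln - m)
    return e_r / (e_r + e_n)


def should_retrieve(next_token_logits: Dict[str, float], threshold: float = 0.5) -> bool:
    """Retrieve only when the model's confidence that retrieval helps exceeds the threshold."""
    return retrieval_probability(next_token_logits) > threshold
```

Raising the threshold makes retrieval rarer (faster, but it may skip helpful context); lowering it moves the system back toward always retrieving.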

For fine-tuning, we used a dataset built by sampling code from open-license repositories, randomly masking lines of the code, and retrieving related code from elsewhere in the repository. We then compared an LLM’s reconstructions of the masked code with and without the extra context. Finally, the examples were labeled according to whether or not retrieval improved generation.
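The labeling step can be sketched as a comparison of two reconstruction scores. The article does not say which metric was used, so this sketch assumes a character-level similarity from Python’s `difflib.SequenceMatcher` as a stand-in for edit similarity; the function names and the `margin` parameter are hypothetical.

```python
from difflib import SequenceMatcher


def similarity(prediction: str, reference: str) -> float:
    """Character-level similarity in [0, 1]; an assumed stand-in for edit similarity."""
    return SequenceMatcher(None, prediction, reference).ratio()


def label_example(reference: str, pred_without_ctx: str, pred_with_ctx: str,
                  margin: float = 0.0) -> bool:
    """Return True if the retrieved context improved the reconstruction of the masked code."""
    gain = similarity(pred_with_ctx, reference) - similarity(pred_without_ctx, reference)
    return gain > margin
```

A positive label would then teach the model to emit its "retrieve" token for similar inputs; ties and regressions are labeled negative.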

In experiments on code completion tasks, we found that a code LLM fine-tuned on our dataset performed even better than a model that always performed retrieval, while accelerating inference by 70% thanks to selective retrieval. In the paper, we also report extensive experiments demonstrating that our approach generalizes well to different models and different code completion tasks.

Method

All the steps in creating our dataset (sampling and masking code, retrieving related code, and generating code with and without the retrieved context) can be scaled up.
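For instance, the masking step can be as simple as cutting out a random block of consecutive lines. This is a minimal sketch under assumed choices (a maximum span of three lines, uniform sampling of span length and position); the function name is hypothetical, and the paper’s sampling scheme may be different.

```python
import random
from typing import Tuple


def mask_random_lines(code: str, rng: random.Random,
                      max_span: int = 3) -> Tuple[str, str, str]:
    """Split code into (prefix, masked_middle, suffix) by masking a random block of lines."""
    lines = code.splitlines(keepends=True)
    if not lines:
        raise ValueError("cannot mask empty code")
    # Choose how many consecutive lines to mask, then where the block starts.
    span = rng.randint(1, min(max_span, len(lines)))
    start = rng.randint(0, len(lines) - span)
    prefix = "".join(lines[:start])
    middle = "".join(lines[start:start + span])
    suffix = "".join(lines[start + span:])
    return prefix, middle, suffix
```

Because the three pieces always concatenate back to the original file, the masked middle serves directly as the ground truth for scoring reconstructions.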

We experimented with several methods of retrieving contextual information from the repository, including UniXcoder, which uses transformer-based semantic embeddings to match code sequences, and CodeBLEU, which uses n-gram data, syntax trees, and code-flow semantics. However, neither outperformed the much more efficient Jaccard similarity, which is the ratio between the intersection of two token sequences and their union. So for most of our experiments, we used Jaccard retrieval. We hypothesize that better performance could be achieved with semantic retrieval that uses structure-aware chunking rather than fixed-size line chunking. We leave this as future work.
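Jaccard retrieval over fixed-size line chunks can be sketched in a few lines. Here the tokenizer (identifiers and numbers via a regular expression), the window of 10 lines, the stride of 5, and `top_k=2` are illustrative assumptions, and all function names are hypothetical; the paper’s exact chunking and tokenization may differ.

```python
import re
from typing import Dict, List, Set, Tuple


def tokenize(code: str) -> Set[str]:
    """Identifier and number tokens, collected as a set for Jaccard similarity."""
    return set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*|\d+", code))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """|A ∩ B| / |A ∪ B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def chunk_lines(code: str, window: int = 10, stride: int = 5) -> List[str]:
    """Split a file into fixed-size, overlapping windows of lines (not structure-aware)."""
    lines = code.splitlines()
    if len(lines) <= window:
        return ["\n".join(lines)]
    starts = list(range(0, len(lines) - window + 1, stride))
    if starts[-1] + window < len(lines):  # make sure the tail of the file is covered
        starts.append(len(lines) - window)
    return ["\n".join(lines[s:s + window]) for s in starts]


def retrieve(query: str, repo_files: Dict[str, str],
             top_k: int = 2) -> List[Tuple[float, str]]:
    """Rank every chunk in the repository by Jaccard similarity to the query."""
    q = tokenize(query)
    scored = [(jaccard(q, tokenize(chunk)), chunk)
              for text in repo_files.values()
              for chunk in chunk_lines(text)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

In a completion setting, the query would typically be the few lines just before the cursor. The appeal of this approach is that it needs no embedding model at all, which is one reason it is so much cheaper than semantic retrieval.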

For model fine-tuning, we used the fill-in-the-middle mechanism, in which the masked code is cut out of the code sequence and the preceding and succeeding sections are marked with special tokens. The training target consists of the input string with the masked code appended at the end, again marked with special tokens. This allows the model to use the contextual information both before and after the masked code; it has been found to give better results than training the model to insert the generated code between the preceding and succeeding sections.
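To make the layout concrete, here is a sketch of how one fill-in-the-middle training sequence could be assembled. It borrows the sentinel token names used by StarCoder’s tokenizer (`<fim_prefix>`, `<fim_suffix>`, `<fim_middle>`, `<|endoftext|>`) as an assumption; the exact format used for our fine-tuning, including how retrieved context and the decision tokens are placed, may differ, and the function name is hypothetical.

```python
from typing import Tuple

# StarCoder-style sentinel tokens, assumed here for illustration.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"
EOS = "<|endoftext|>"


def build_fim_example(prefix: str, middle: str, suffix: str) -> Tuple[str, str]:
    """Return (model_input, training_sequence) in prefix-suffix-middle order."""
    # The masked code is removed; the code before and after it is marked with sentinels.
    model_input = f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
    # The training target appends the masked code at the end, closed by an end token.
    training_sequence = model_input + middle + EOS
    return model_input, training_sequence
```

Because the middle comes last, an ordinary left-to-right language model can generate it while having already seen both the prefix and the suffix.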

During fine-tuning, we have two training objectives: correctly reconstructing the missing code and accurately assessing when retrieved information will help the reconstruction.
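One common way to train toward two objectives at once is to minimize a weighted sum of their negative log-likelihoods. The sketch below assumes exactly that: a mean token-level loss for reconstructing the masked code plus a loss for predicting the correct retrieval-decision token, balanced by a hypothetical weight `lam`. It is an illustration of the idea, not the paper’s exact loss.

```python
import math
from typing import Sequence


def combined_loss(code_token_logprobs: Sequence[float],
                  decision_logprob: float,
                  lam: float = 1.0) -> float:
    """Weighted sum of the two training objectives, as negative log-likelihoods.

    code_token_logprobs: log-probabilities of each ground-truth token of the masked code.
    decision_logprob: log-probability of the correct retrieval-decision token.
    lam: hypothetical weight balancing the two objectives.
    """
    if not code_token_logprobs:
        raise ValueError("need at least one code token")
    # Objective 1: mean negative log-likelihood of reconstructing the missing code.
    reconstruction = -sum(code_token_logprobs) / len(code_token_logprobs)
    # Objective 2: negative log-likelihood of the correct "retrieval helps?" decision.
    decision = -decision_logprob
    return reconstruction + lam * decision
```

Setting `lam` to zero recovers plain fill-in-the-middle training; larger values put more emphasis on the retrieval decision.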

Evaluation of accuracy

Compared with existing models such as StarCoder, our method, which we call Repoformer, improves accuracy and reduces inference latency across different benchmarks, including RepoEval and CrossCodeLongEval, a new benchmark targeting long-form code completion.

Evaluation of latency

We illustrate Repoformer’s ability to reduce latency in a realistic “online serving” setting. We assume that the working repository has already been indexed. Given a code completion request containing the current file, the system launches three processes at the same time:

- Make a retrieval decision using Repoformer;

- Use a code LLM to generate the code completion without cross-file context;

- Retrieve the cross-file context and use it to generate the code completion.
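The three-way setup can be sketched with a thread pool: all three tasks start together, and once the decision arrives, the server returns the matching result. If retrieval is judged unnecessary, the response no longer waits on the slow retrieval path. The three component functions are hypothetical stand-ins with artificial delays and a toy decision rule; a real system would call the policy model, the code LLM, and the retriever.

```python
import time
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the three components (delays are illustrative only).
def decide_retrieval(request: str) -> bool:
    time.sleep(0.01)
    return "load_config" in request  # toy rule, for illustration only


def generate_without_context(request: str) -> str:
    time.sleep(0.02)
    return "completion (in-file context only)"


def retrieve_and_generate(request: str) -> str:
    time.sleep(0.2)  # retrieval plus a longer prompt makes this the slowest path
    return "completion (with cross-file context)"


def serve(request: str, pool: ThreadPoolExecutor) -> str:
    """Launch all three tasks at once and return the result the decision selects."""
    decision = pool.submit(decide_retrieval, request)
    plain = pool.submit(generate_without_context, request)
    augmented = pool.submit(retrieve_and_generate, request)
    if decision.result():
        return augmented.result()
    # cancel() only takes effect if the task has not started running yet.
    augmented.cancel()
    return plain.result()
```

The pool should have at least three workers and be shared across requests, so that returning early does not block on the abandoned retrieval task.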

Repoformer’s selective retrieval improves both accuracy and inference speed, and these gains hold across a wide range of selection-threshold settings.

More interestingly, Repoformer can act as a plug-and-play policy model, reducing inference latency for various strong code LLMs used as the generation model in RAG.

With over 85% accuracy in its retrieval decisions, Repoformer helps ensure that retrieved context is used only when it adds value.

Further analyses show that the proposed strategy improves Repoformer’s robustness to retrieval, with fewer cases in which retrieval hurts the completion and more cases in which it helps.

Acknowledgments

We are incredibly grateful to Wasi Uddin Ahmad and Dejiao Zhang for their contributions as mentors on this project. Their guidance, from formulating the project to the many suggestions they made during our regular meetings, made a big difference. We would also like to thank the other coauthors and the anonymous ICML reviewers for their valuable feedback, which really helped improve and refine the work.