Today in Arlington, Virginia, at Amazon’s new HQ2, Amazon Senior Vice President Dave Limp hosted an event in which the devices and service organization rolled its new series of products and services. In part of the presentation, Limp, along with Rohit Prasad, was an Amazon Senior Vice President and Head Scientist for Artificial General Intelligence, showing a number of innovations from the Alexa team.

Prasad’s most important advertising was the release of the new Alexa Large Language Model (LLM), a larger and more generalized model that has been optimized for voice applications. This model can talk to customers on any topic; It has been fine-tuned to connect to make the right API calls so that it turns on the right lights and adds the temperature in the right rooms; It is capable of proactive, inference-based personalization so that it can highlight calendar events, recently played music or even recipe recommendations based on the customer’s Rocery purchase; It has several mechanisms from nowledge-aging, to make its bills, which are overall more claims; And it has protective frames in place to protect the customer’s privacy.

During the presentation, Prasad discussed several other upgrades to Alexas Conversational-IA models, designed to make interactions with Alexa more natural. One is a new way to invoke Alexa by simply looking at the screen of a camera-activated Alexa device, eliminated the need to say Wake Word on each turn: Visual Processing on a device is combined with acoustic models to decide that a customer speaks to Alexa otherwise.

Alexa has also had its Automatic-Tale Recognition (ASR) system overhaul-Inclusive machine learning models, algorithms and hardware and it moves to a new large text-to-speech model based on the LLM architecture and trained on thousands of hours of multi-spare, multi-accent and based on Multi-Speaking-style audio data.

Finally revealed Prasad Alexa’s new Speech-to-speech Model, an LLM-based model that produces output speech directly from input speech. With the speech-to-speech model, Alexa will exhibit human conversation associations, such as laughter, and it will be able to adapt its prosodi not only to the content of his own utterances, but to the speaker’s prosody as well, for example, answer with the speaker’s.

The ASR update goes live later this year; Both LTTs and speech-to-speech model will be implemented next year.

Speech recognition

The new Alexa ASR model is a multi-lion parameter model trained on a mixture of shorts, targeted utterances and long-shaped conversations. Education required a careful exchange of data types and training goals to ensure best in class performance on both types of interactions.

To accommodate the larger ASR model, Alexa moves from CPU-based speech treatment to hardware-accelerated treatment. Input to an ASR model is frame of data or 30-Millisecond snapshots of the frequency spectrum of the speech signal. We CPUs, frames are typically treated at a time. But it is ineffective for GPUs that have Mayry treatment cores that run parallel and need enough data to keep them all busy.

Alexa’s new ASR engine accumulates frames of input speech until it has the opportunity to secure sufficient work for all cores in the GPUs. To minimize latency, it also tracks the breaks in the speech signal, and if the duration of the break is long enough to indicate the end of the speech, it immediately sends all accumulated frames.

Batching of spoken data required for GPU treatment also enables a new speech recognition algorithm that uses dynamic lookahead to improve the ASR accident. When a streaming ASR application typically interprets an input frame, it used the previous frames as context: Information on previous frames can construct its hypotheses about the current framework in useful way. However, with batching data, the ASR model can use not only the previous frames, but also the following frames as context, providing more accurate hypotheses.

The final determination of the end of speech is made by an ASR engine End Poin. The earliest end points are all withdrawn in length. Since the emergence of end-end-talk recognition, ASR models have been trained in Audi-Text-Peers, whose texts included a special end-smo-smoon at the end of each utterance. The model then learns to emit the token as part of its ASR hypotheses, indicating the end of speech.

Alexa’s ASR engine has been updated with a new two-pass-end-point that can improve the type of mid-set breaks in more extended conversation, the other passport is performed by a Final Point’s arbitratorthat takes as input ASR model’s transcription of the current voice signal and its Coding of the signal. While the coding captures features needed for speech recognition, it also contains information that is useful for identifying acoustic and prosodic signals indicating where a user has finished talking.

End-pinting duty man is a separately trained dybling model that sends out a decision on the last framework for its input really represents the end of speech. Becuse IT factors in both semantic and acoustic data, its assessments are more accurate than those in a model that prioritizes one or the other. And because it takes ASR codes as input, it can level the final fair scale of ASR models to continue to improve the battery.

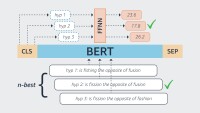

Once the new ASR model has generated a set of hypotheses about the text that corresponds to the input speech, the hypotheses go to an LLM that has been fine-tuned to redirect them to produce more accurate results.

In the event that the new, improved end points cut off the speech too soon, Alexa may still recover thanks to a model that helps repair truncated speech. Applied Scientist Marco Damonte and Angus Addlesee, a train-Intern that studied artificial intelligence at Heriot-Watt University, described this model on the Amazon Science blog after presenting a paper about it at Intheerspeak.

The model produces a graph representation of the semantic relationship between words in an input text. From the map, downnstream models can often derive the missing information; When they can, they can still often derive the semantic role of the lack of words that can help Alexa ask clarifying questions. This also makes conversation with Alexa more natural.

Great text-to-speech

Unlike previous TTS models, LTTS is an end-to-end model. It consists of a traditional text-to-text LLM and a speech synthesis model that is fine-tuned in tandem, so output from LLM is tailored to the needs of the speech synthesizer. The fine -tuning data set consists of thousands of hours of speech, versus the about 100 hours of use to train previous models.

The fine-tuned LTTS model learns to implicitly model prosody, tonality, intonation, paralinguisms and other aspects of speech, and its output is used to generate speech.

The result is the speech that combines the complete selection of emotional elements present in human communication-as curiosity when asking questions and cartoon joke delives with natural défluencies and paraling-culprit (such as UMS, AHS or Mutturing) to create natural, express and human-like speech output.

To further improve the model’s expressic, the LTTS model may be associated with another LLM fine-tuned to feel the input text of “stage guidance”, indicating how the text should be delivered. The labeled text is then transferred to the TTS model for conversion to speech.

Speech-to-speech model

The Alexa Speech-to-Tale model will utilize a proprietary prior LLM to enable end-to-end speech: Input is a coding of the customer’s speech signal and output is a coding of Alexa’s voice signal in response.

This coding is one of the keys to the procedure. It is a learned coding and it represents both semantic and acoustic functions. The speech-to-speech model uses the same coding for both input and output; The output is then decoded to produce an acoustic signal in one of Alexa’s voices. The shared “vocabulary” of input and output is what allows you to build the model on top of a charged LLM.

A trial-to-talk interaction

LLM is fine-tuned on a variety of tasks, such as speech recognition and speech-to-speech translation, to ensure its generality.

Alexa’s new capabilities will start rolling out over the next few months.