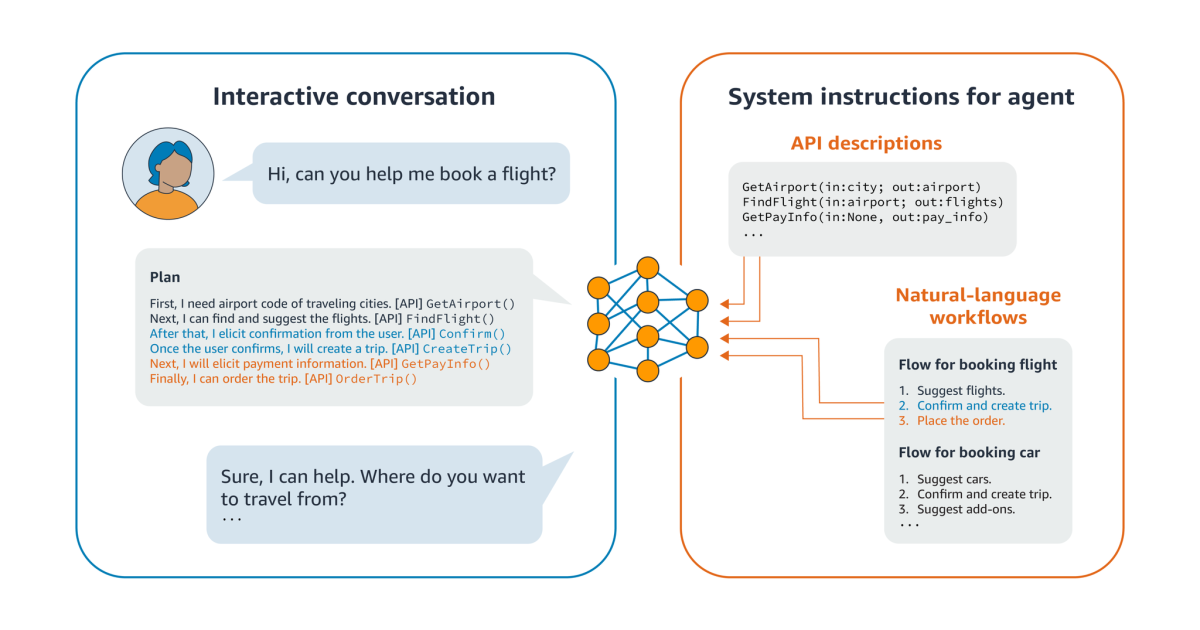

Until the recent, astonishing success of large language models (LLMs), research on dialogue-based AI systems pursued two main paths: chatbots, or agents capable of open-ended conversation, and task-oriented dialogue models, whose goal was to extract arguments for APIs and complete tasks on behalf of the user. LLMs have enabled enormous progress on the first challenge but less on the second.

In part, this is because LLMs have difficulty following prescribed sequences of operations, whether operational workflows or API dependencies. For example, a travel agent might want to recommend car rental, but only after a customer has booked a flight; similarly, the API call that searches for flight options can occur only after the API calls that convert city names to airport codes.

At this year's meeting of the North American Chapter of the Association for Computational Linguistics (NAACL), we presented a new method for constraining the outputs of LLMs so that they adhere to prescribed sequences of operations. Fortunately, the method requires no fine-tuning of the LLMs' parameters and can work with any LLM (with logit access) out of the box.

We use graphs to capture API and workflow dependencies, and we construct a prompt that induces the LLM to respond to a user request by producing a plan: a sequence of API calls, together with a natural-language rationale for each. Given a prompt, we consider the k likeliest next tokens, as predicted by the LLM. For each of these tokens, we look ahead, continuing the output generation up to a fixed length. We score the k token-continuation sequences according to their semantic content and according to how well they adhere to the dependency constraints represented in the graph, and we ultimately select only the top-scoring sequence.
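The look-ahead selection described above can be sketched as follows. This is a minimal illustration, not the published implementation: the function `lookahead_select`, the toy "model" `toy_next_tokens`, and the scorer `toy_score` are all hypothetical stand-ins. Whole API names play the role of tokens, and the look-ahead continuation is generated greedily, which is an assumption made for brevity.

```python
from typing import Callable, List, Tuple

Candidates = List[Tuple[str, float]]  # (token, probability) pairs


def lookahead_select(
    prefix: List[str],
    next_tokens: Callable[[List[str]], Candidates],
    score: Callable[[List[str]], float],
    k: int = 3,
    lookahead: int = 4,
) -> List[str]:
    """Extend `prefix` with each of the k likeliest tokens, continue each
    greedily up to `lookahead` tokens, and return the best-scoring sequence."""
    top_k = sorted(next_tokens(prefix), key=lambda tp: tp[1], reverse=True)[:k]
    best, best_score = list(prefix), float("-inf")
    for token, _prob in top_k:
        continuation = prefix + [token]
        for _ in range(lookahead - 1):
            options = next_tokens(continuation)
            if not options:
                break
            continuation.append(max(options, key=lambda tp: tp[1])[0])
        s = score(continuation)
        if s > best_score:
            best, best_score = continuation, s
    return best


# Hypothetical toy "LLM": the likeliest first token violates the dependency.
TOY_MODEL = {
    "<start>": [("search_flights", 0.6), ("get_airport_code", 0.4)],
    "search_flights": [("get_airport_code", 1.0)],
    "get_airport_code": [("search_flights", 1.0)],
}


def toy_next_tokens(prefix: List[str]) -> Candidates:
    return TOY_MODEL.get(prefix[-1], [])


def toy_score(seq: List[str]) -> float:
    """Reward sequences that look up airport codes before searching flights."""
    if "get_airport_code" in seq and "search_flights" in seq:
        ordered = seq.index("get_airport_code") < seq.index("search_flights")
        return 1.0 if ordered else 0.0
    return 0.5


plan = lookahead_select(["<start>"], toy_next_tokens, toy_score, k=2, lookahead=2)
```

With `k=1` the procedure reduces to greedy decoding and follows the likeliest (but out-of-order) token; with `k=2` the scorer can prefer the continuation that respects the dependency.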

Existing datasets for task-oriented dialogue tend not to feature many sequence-of-operation dependencies, so to test our approach, we created our own dataset. It includes 13 distinct workflows and 64 API calls, and each workflow has 20 associated queries, such as differently phrased requests to book flights between different cities. Associated with each workflow is a gold-standard plan consisting of API calls in order.

In experiments, we compared our approach to the use of the same prompts with the same LLMs but with different decoding methods, or methods for selecting sequences of output tokens. Given a query, the models were supposed to generate plans that would successfully resolve it. We evaluated solutions according to their deviation from the gold plan, such as the number of out-of-sequence operations in the plan, and according to other factors, such as API hallucination and API redundancy.
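To make these evaluation criteria concrete, here is a small sketch of how such metrics could be computed over plans represented as lists of API names. The exact metric definitions used in the experiments are not given in this post, so the functions below (`plan_edit_distance`, `count_hallucinated`, `count_redundant`) and the API names are illustrative assumptions: edit distance is taken to be standard Levenshtein distance over API calls, a hallucination is a call outside the allowed set, and redundancy is a repeated call.

```python
from typing import List, Set


def plan_edit_distance(generated: List[str], gold: List[str]) -> int:
    """Levenshtein distance between two plans, counted in whole API calls."""
    prev = list(range(len(gold) + 1))
    for i, g in enumerate(generated, 1):
        curr = [i]
        for j, t in enumerate(gold, 1):
            cost = 0 if g == t else 1
            curr.append(min(prev[j] + 1,          # delete a generated call
                            curr[j - 1] + 1,      # insert a missing gold call
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def count_hallucinated(generated: List[str], allowed: Set[str]) -> int:
    """Number of generated calls that are not in the allowed API set."""
    return sum(1 for api in generated if api not in allowed)


def count_redundant(generated: List[str]) -> int:
    """Number of calls that repeat an earlier call in the same plan."""
    return len(generated) - len(set(generated))


# Hypothetical example plans.
gold_plan = ["get_airport_code", "search_flights", "book_flight"]
generated_plan = ["search_flights", "get_airport_code", "book_flight", "rent_spaceship"]

edits = plan_edit_distance(generated_plan, gold_plan)
hallucinated = count_hallucinated(generated_plan, set(gold_plan))
redundant = count_redundant(generated_plan)
```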

Our method frequently reduced the number of hallucinated API calls and out-of-sequence generations by an order of magnitude, or even two. It reduced the number of edits required to reconcile generated plans with the gold plans by 8% to 64%, depending on the model and the hyperparameters of the decoding methods.

We also showed that our approach enabled smaller LLMs, on the order of seven billion parameters, to achieve the same performance as LLMs with 30 to 40 billion parameters. This is an encouraging result, since with increased commercial use of LLMs, operational efficiency and cost are becoming more and more important.

FLAP

With our approach, which we call FLAP, for flow-adhering planning, every query to an agent is prefaced by a set of instructions that specify the allowable API calls and the relevant workflow sequences. The prompt also includes example thoughts that explain each API call in natural language.

We experimented with instruction sets that did and did not include thoughts and found that their inclusion improved the quality of the generations.

For each input token, an LLM generates a list of possible succeeding tokens, scored according to probability. All LLMs use some kind of decoding strategy to convert those lists into strings: for example, a greedy decoder simply grabs the highest-probability succeeding token; nucleus sampling selects only a core group of high-probability successors and samples from among them; and beam decoding tracks multiple high-probability token sequences in parallel and selects the one that has the highest overall probability.
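As a rough illustration of two of these baseline strategies, the sketch below applies greedy decoding and nucleus (top-p) sampling to a single, made-up next-token distribution. The function names and the probabilities are hypothetical; real decoders operate on the model's logits over the full vocabulary.

```python
import random
from typing import Dict, List, Optional, Tuple


def greedy_pick(probs: Dict[str, float]) -> str:
    """Greedy decoding: take the single highest-probability token."""
    return max(probs, key=probs.get)


def nucleus_candidates(probs: Dict[str, float], top_p: float) -> List[Tuple[str, float]]:
    """Smallest set of top tokens whose cumulative probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, total = [], 0.0
    for token, p in ranked:
        nucleus.append((token, p))
        total += p
        if total >= top_p:
            break
    return nucleus


def nucleus_sample(probs: Dict[str, float], top_p: float,
                   rng: Optional[random.Random] = None) -> str:
    """Nucleus sampling: sample within the nucleus, weighted by probability."""
    rng = rng or random.Random()
    tokens, weights = zip(*nucleus_candidates(probs, top_p))
    return rng.choices(tokens, weights=weights, k=1)[0]


# Made-up next-token distribution for illustration only.
next_token_probs = {"book": 0.5, "search": 0.3, "rent": 0.15, "cancel": 0.05}

greedy_token = greedy_pick(next_token_probs)
nucleus = nucleus_candidates(next_token_probs, top_p=0.75)
sampled_token = nucleus_sample(next_token_probs, top_p=0.75, rng=random.Random(0))
```

Neither strategy looks at anything other than token probabilities, which is why they have no way to prefer a continuation that respects workflow order.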

FLAP proposes an alternative decoding strategy, which uses a scoring system that encourages the selection of tokens that adhere to the API and workflow dependency graphs. First, for each output thought, we use a semantic-similarity scoring model to identify the most similar step in the instruction workflows.
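The matching step can be sketched as below. Note the substitution: in place of a learned semantic-similarity model, this sketch uses plain word-overlap (Jaccard) similarity so that it runs without any dependencies. The function names and the workflow step descriptions are hypothetical.

```python
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity; a crude stand-in for a semantic-similarity model."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a or not tokens_b:
        return 0.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def most_similar_step(thought: str, steps: List[str]) -> str:
    """Return the workflow step description closest to the generated thought."""
    return max(steps, key=lambda step: jaccard(thought, step))


# Hypothetical workflow step descriptions taken from an instruction prompt.
workflow_steps = [
    "convert city names to airport codes",
    "search for flight options",
    "book the selected flight",
]

matched = most_similar_step("I need to search for available flight options", workflow_steps)
```

A sentence-embedding model with cosine similarity would be a more faithful choice; the interface (thought in, best-matching step out) would stay the same.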

Then, based on the workflow dependency graph, we determine whether the current thought is permitted, that is, whether the steps corresponding to its ancestor nodes in the graph (its preconditions) have already been executed. If they have, the thought receives a high score; if not, it receives a low score.
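A minimal sketch of this precondition check follows, assuming the dependency graph is stored as a mapping from each step to its direct prerequisites. The graph contents, the function names, and the concrete score values (1.0 and 0.0) are illustrative assumptions, not values from the paper.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical workflow graph: step -> direct prerequisites.
WORKFLOW_GRAPH: Dict[str, List[str]] = {
    "get_airport_code": [],
    "search_flights": ["get_airport_code"],
    "book_flight": ["search_flights"],
    "recommend_car_rental": ["book_flight"],
}


def ancestors(step: str, graph: Dict[str, List[str]]) -> Set[str]:
    """All direct and transitive prerequisites of `step`."""
    seen: Set[str] = set()
    stack = list(graph.get(step, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def dependency_score(step: str, executed: Iterable[str],
                     graph: Dict[str, List[str]],
                     high: float = 1.0, low: float = 0.0) -> float:
    """High score if every ancestor of `step` has been executed, else low."""
    return high if ancestors(step, graph) <= set(executed) else low


allowed = dependency_score("search_flights", ["get_airport_code"], WORKFLOW_GRAPH)
blocked = dependency_score("recommend_car_rental", ["get_airport_code"], WORKFLOW_GRAPH)
```

The same check applies to API calls if the graph's nodes are API names rather than workflow steps.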

We score API calls in the same way, that is, based on whether their order of execution is consistent with the dependency graph. We also use the similarity-scoring model to measure the similarity between the generated thought and the user query and between the thought and the generated API call.

Finally, we score the plan on whether it adheres to the <thought, API call> syntax for every step of the plan, with the thought and the API call preceded by the tags "[thought]" and "[API]", respectively. On the basis of all these measures, our model selects one of the k token sequences sampled from the LLM's output.
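The syntax check and the final selection could look roughly like this. The sketch assumes one plan step per line in the form `[thought] ... [API] ...`, and it combines scores with a weighted sum; both the line layout and the weighting scheme are assumptions, since the post does not specify how the individual scores are aggregated. All function names are hypothetical.

```python
import re
from typing import Callable, List, Sequence

# Assumed layout: each step is one line, "[thought] <text> [API] <call>".
STEP_PATTERN = re.compile(r"^\[thought\]\s*\S.*?\[API\]\s*\S.*$")


def syntax_score(plan: str) -> float:
    """Fraction of non-empty plan lines that follow the assumed step syntax."""
    lines = [line.strip() for line in plan.splitlines() if line.strip()]
    if not lines:
        return 0.0
    return sum(1 for line in lines if STEP_PATTERN.match(line)) / len(lines)


def select_best(candidates: List[str],
                scorers: Sequence[Callable[[str], float]],
                weights: Sequence[float]) -> str:
    """Pick the candidate with the highest weighted sum of scores."""
    def total(candidate: str) -> float:
        return sum(w * scorer(candidate) for scorer, w in zip(scorers, weights))
    return max(candidates, key=total)


well_formed = (
    "[thought] I need the airport code for Boston. [API] get_airport_code(city='Boston')\n"
    "[thought] Now I can look for flights. [API] search_flights(origin='BOS')"
)
malformed = (
    "[thought] I need the airport code for Boston. [API] get_airport_code(city='Boston')\n"
    "[API] search_flights(origin='BOS')"
)

best = select_best([malformed, well_formed], scorers=[syntax_score], weights=[1.0])
```

In a fuller version, the `scorers` list would also hold the dependency and similarity scores, each with its own weight.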

In our experiments, we considered two variations on the instruction sets: one in which all the workflow constraints for an entire domain are included in the prompt and one in which only the relevant workflow is included. The baselines and the FLAP implementation used the same LLMs, differing only in decoding strategy: the baselines used greedy, beam search, and nucleus-sampling decoders.

Across the board, on all the LLMs tested, FLAP outperformed the baselines. Furthermore, we did not observe a clear runner-up among the baselines on different metrics. Hence, even in cases where FLAP offered relatively mild improvement over a particular baseline on a particular metric, it often offered much greater improvement on one of the other metrics.

The current FLAP implementation generates an initial plan for an input user query. But in the real world, customers frequently revise or extend their intentions over the course of a dialogue. In ongoing work, we plan to investigate models that can dynamically adapt the initial plan based on an ongoing dialogue with the customer.