At this year’s International Conference on Machine Learning (ICML), Amazon researchers have several papers on bandit problems and differential privacy, two topics of perennial interest. But they also explore a variety of other topics, with a mix of theoretical analysis and practical application.

Adaptive neural computation

Adaptive neural computation means tailoring the amount of computation a neural model performs to its input, on the fly. At ICML, Amazon researchers apply this approach to automatic speech recognition.

Lookahead when it matters: Adaptive non-causal transformers for streaming neural transducers

Grant Strimel, Yi Xie, Brian King, Martin Radfar, Ariya Rastrow, Athanasios Mouchtaris
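Adaptive computation can take many forms. The paper concerns how much future context a streaming transducer looks ahead at; as a simpler, generic illustration of the same idea, the sketch below shows early exiting, where a model stops running further stages once its prediction is confident enough. The stage functions, the `threshold` of 0.9, and all names here are hypothetical and are not taken from the paper.

```python
import math
from typing import Callable, List, Sequence, Tuple

Stage = Callable[[float], List[float]]

def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def early_exit_predict(x: float, stages: Sequence[Stage], threshold: float = 0.9) -> Tuple[int, int]:
    """Run stages in order and stop at the first whose top-class probability
    reaches `threshold`. Returns (predicted_class, number_of_stages_run)."""
    if not stages:
        raise ValueError("need at least one stage")
    probs: List[float] = []
    for depth, stage in enumerate(stages, start=1):
        probs = softmax(stage(x))
        if max(probs) >= threshold:
            return probs.index(max(probs)), depth
    # No stage was confident enough: fall back to the last (most expensive) one.
    return probs.index(max(probs)), len(stages)

# Hypothetical stages: deeper stages produce sharper logits for the same input.
STAGES: List[Stage] = [lambda x, k=k: [k * x, -k * x] for k in (1.0, 2.0, 4.0, 8.0)]

print(early_exit_predict(3.0, STAGES))  # (0, 1)
print(early_exit_predict(0.2, STAGES))  # (0, 4)
```

A confident input such as `3.0` exits after the first stage, while an ambiguous one such as `0.2` runs all four, so the amount of computation tracks how hard the input is.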

Bandit problems

Bandit problems (which take their name from slot machines, or one-armed bandits) pose the explore-exploit dilemma: an agent interacting with the environment must simultaneously maximize some reward and learn how to maximize that reward. They commonly arise in reinforcement learning.

Delay-adapted policy optimization and improved regret for adversarial MDP with delayed bandit feedback

Tal Lancewicki, Aviv Rosenberg, Dmitry Sotnikov

Incentivizing exploration with linear contexts and combinatorial actions

Mark Sellke

Multi-Task Off-Policy Learning from Bandit Feedback

Joey Hong, Branislav Kveton, Manzil Zaheer, Sumeet Katariya, Mohammad Ghavamzadeh

Thompson sampling with diffusion generative prior

Yu-Guan Hsieh, Shiva Kasiviswanathan, Branislav Kveton, Patrick Bloebaum
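To make the explore-exploit dilemma concrete, here is a minimal sketch of Thompson sampling on a simulated Bernoulli bandit. It uses a simple Beta(1, 1) prior per arm rather than the diffusion generative prior studied in the paper, and the arm payout rates are hypothetical.

```python
import random
from typing import List, Sequence

def thompson_sampling(true_probs: Sequence[float], rounds: int, seed: int = 0) -> List[int]:
    """Beta-Bernoulli Thompson sampling; returns how often each arm was pulled."""
    rng = random.Random(seed)
    k = len(true_probs)
    successes, failures, pulls = [0] * k, [0] * k, [0] * k
    for _ in range(rounds):
        # Sample a plausible payout rate for each arm from its Beta(1 + s, 1 + f)
        # posterior, then act greedily on the samples: uncertain arms still get
        # tried (exploration), while arms that look good get pulled most (exploitation).
        samples = [rng.betavariate(1 + successes[a], 1 + failures[a]) for a in range(k)]
        arm = max(range(k), key=lambda a: samples[a])
        # The simulated environment pays 1 with probability true_probs[arm].
        reward = 1 if rng.random() < true_probs[arm] else 0
        successes[arm] += reward
        failures[arm] += 1 - reward
        pulls[arm] += 1
    return pulls

# Hypothetical slot machines with payout rates 0.2, 0.5, and 0.8.
pulls = thompson_sampling([0.2, 0.5, 0.8], rounds=2000)
print(pulls)  # most pulls should go to the last arm
```

Because the posterior for a rarely pulled arm stays wide, that arm occasionally produces a high sample and gets tried again; as evidence accumulates, the posteriors narrow and play concentrates on the best arm.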

Differential privacy

Differential privacy is a statistical guarantee of privacy that involves minimizing the probability that an attacker can determine whether a given data item is or is not included in a particular dataset.

Differentially private optimization on large model at small cost

Zhiqi Bu, Yu-Xiang Wang, Sheng Zha, George Karypis

Fast private kernel density estimation via locality sensitive quantization

Tal Wagner, Yonatan Naamad, Nina Mishra
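A standard way to achieve the guarantee described above is the Laplace mechanism, sketched below for a counting query. This is a textbook building block, not the method of either paper, and the dataset and function names are hypothetical.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")

def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records: Iterable[T], predicate: Callable[[T], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query. Adding or removing one record
    changes the true count by at most 1 (sensitivity 1), so noise with scale
    1/epsilon gives epsilon-differential privacy."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

ages = list(range(100))  # hypothetical dataset
print(private_count(ages, lambda a: a >= 50, epsilon=1.0, rng=random.Random(0)))
```

Because any one person's presence shifts the true count by at most 1, the noisy answers with and without that person have nearly the same distribution, which is what limits an attacker's ability to infer membership. A smaller `epsilon` means more noise and a stronger guarantee.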

Distribution shift

Distribution shift is the problem that real-world data may turn out to have a different distribution than the datasets on which a machine learning model is trained. At ICML, Amazon researchers present a new benchmark that can help combat this problem.

RLSbench: Domain adaptation under relaxed label shift

Saurabh Garg, Nick Erickson, James Sharpnack, Alex Smola, Sivaraman Balakrishnan, Zachary Lipton

Ensemble methods

Ensemble methods combine the outputs of several different models to reach a final conclusion. At ICML, Amazon scientists present new theoretical results on stacked-generalization ensembles, in which a higher-level model combines the outputs of lower-level models.

Theoretical guarantees of learning ensembling strategies with applications to time series forecasting

Hilaf Hasson, Danielle Maddix Robinson, Bernie Wang, Youngsuk Park, Gaurav Gupta
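As a minimal sketch of stacked generalization, assuming the simplest possible higher-level model (a linear combination with no intercept), the code below fits combination weights for two lower-level models by least squares. The predictions and targets are hypothetical, and the paper's ensembling strategies and guarantees are more general than this.

```python
from typing import Sequence, Tuple

def fit_stacking_weights(preds_a: Sequence[float], preds_b: Sequence[float],
                         targets: Sequence[float]) -> Tuple[float, float]:
    """Least-squares weights (w_a, w_b) for the higher-level model
    y ~ w_a * a + w_b * b, fit on held-out predictions of two lower-level models."""
    saa = sum(a * a for a in preds_a)
    sbb = sum(b * b for b in preds_b)
    sab = sum(a * b for a, b in zip(preds_a, preds_b))
    say = sum(a * y for a, y in zip(preds_a, targets))
    sby = sum(b * y for b, y in zip(preds_b, targets))
    # Solve the 2x2 normal equations [[saa, sab], [sab, sbb]] w = [say, sby].
    det = saa * sbb - sab * sab
    if det == 0:
        raise ValueError("lower-level predictions are collinear")
    return (say * sbb - sby * sab) / det, (saa * sby - sab * say) / det

def stacked_predict(weights: Tuple[float, float], a: float, b: float) -> float:
    return weights[0] * a + weights[1] * b

# Hypothetical held-out predictions from two forecasters, and the true values.
preds_a = [1.0, 2.0, 3.0, 4.0, 5.0]
preds_b = [2.0, 1.0, 0.0, 1.0, 2.0]
targets = [0.7 * a + 0.3 * b for a, b in zip(preds_a, preds_b)]
w = fit_stacking_weights(preds_a, preds_b, targets)
print(w)  # approximately (0.7, 0.3)
```

The weights are fit on predictions for data the lower-level models did not train on; fitting them on training-set predictions would tend to reward whichever base model overfits most.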

Explainable AI

The way neural networks are trained means that even their developers may not know how the networks arrive at their outputs. At ICML, Amazon scientists explore an explainable-AI method called sample-based explanation, which seeks to identify the training examples most responsible for a given model output.

Representer point selection for explaining regularized high-dimensional models

Che-Ping Tsai, Jiong Zhang, Hsiang-Fu Yu, Jyun-Yu Jiang, Eli Chien, Cho-Jui Hsieh, Pradeep Ravikumar
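The representer-point idea can be illustrated on a deliberately simple case. For L2-regularized linear regression (ridge), the optimal weights can be written as a weighted sum of training inputs, so any prediction splits exactly into one contribution per training example. The sketch below assumes 2-D inputs, a hypothetical dataset, and the objective (1/n) Σ (yᵢ − w·xᵢ)² + λ‖w‖²; the paper treats more general high-dimensional regularized models.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def fit_ridge_2d(X: Sequence[Vec], y: Sequence[float], lam: float) -> Vec:
    """Minimize (1/n) * sum_i (y_i - w.x_i)^2 + lam * ||w||^2 for 2-D inputs
    by solving (X^T X + n * lam * I) w = X^T y."""
    n = len(X)
    a11 = sum(x[0] * x[0] for x in X) + n * lam
    a22 = sum(x[1] * x[1] for x in X) + n * lam
    a12 = sum(x[0] * x[1] for x in X)
    b1 = sum(x[0] * t for x, t in zip(X, y))
    b2 = sum(x[1] * t for x, t in zip(X, y))
    det = a11 * a22 - a12 * a12
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det

def representer_contributions(X: Sequence[Vec], y: Sequence[float], w: Vec,
                              lam: float, x_test: Vec) -> List[float]:
    """At the optimum, w = sum_i alpha_i * x_i with alpha_i = (y_i - w.x_i) / (n * lam),
    so w.x_test splits into one term alpha_i * (x_i . x_test) per training point."""
    n = len(X)
    return [(t - dot(w, x)) / (n * lam) * dot(x, x_test) for x, t in zip(X, y)]

X = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]  # hypothetical training data
y = [1.0, 2.0, 2.5, 4.0]
lam = 0.1
w = fit_ridge_2d(X, y, lam)
contribs = representer_contributions(X, y, w, lam, (1.5, 0.5))
most_responsible = max(range(len(X)), key=lambda i: abs(contribs[i]))
print(contribs, most_responsible)
```

The contributions sum to the model's prediction for `x_test`, so the training example with the largest-magnitude term is, in this simple setting, the one "most responsible" for that output.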

Extreme multilabel classification

Extreme multilabel classification is the problem of classifying data when the space of labels (classification categories) is enormous. At ICML, Amazon researchers examine the use of side information, such as label metadata or instance correlation signals, to improve classification performance.

PINA: Leveraging side information in extreme multi-label classification via predicted instance neighborhood aggregation

Eli Chien, Jiong Zhang, Cho-Jui Hsieh, Jyun-Yu Jiang, Wei-Cheng Chang, Olgica Milenkovic, Hsiang-Fu Yu

Graph neural networks

Graph neural networks produce vector representations of graph nodes that factor in information about the nodes themselves and their neighbors in the graph. At ICML, Amazon researchers examine techniques for better initializing such networks.

On the initialization of graph neural networks

Jiahang Li, Yakun Song, Xiang Song, David Paul Wipf
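The way a graph neural network combines a node's own features with its neighbors' can be sketched as one mean-aggregation message-passing layer. This is a generic layer, not the architecture or initialization scheme from the paper; the graph is hypothetical, and identity matrices stand in for whatever weights an initialization scheme would produce.

```python
from typing import Dict, List, Sequence

Matrix = List[List[float]]

def matvec(M: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def gnn_layer(features: Dict[str, List[float]], neighbors: Dict[str, List[str]],
              W_self: Matrix, W_neigh: Matrix) -> Dict[str, List[float]]:
    """One mean-aggregation message-passing layer:
    h_v' = ReLU(W_self h_v + W_neigh * mean of h_u over neighbors u of v)."""
    out: Dict[str, List[float]] = {}
    for v, h_v in features.items():
        nbrs = neighbors.get(v, [])
        dim = len(h_v)
        mean = ([sum(features[u][i] for u in nbrs) / len(nbrs) for i in range(dim)]
                if nbrs else [0.0] * dim)
        pre = [a + b for a, b in zip(matvec(W_self, h_v), matvec(W_neigh, mean))]
        out[v] = [max(0.0, z) for z in pre]  # ReLU
    return out

# Hypothetical 3-node graph; identity weights stand in for initialized parameters.
I2 = [[1.0, 0.0], [0.0, 1.0]]
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
print(gnn_layer(features, neighbors, I2, I2))
# {'a': [1.5, 1.0], 'b': [1.0, 1.0], 'c': [2.0, 1.0]}
```

`W_self` and `W_neigh` are the parameters an initialization method would set before training; stacking several such layers lets information flow from nodes further away in the graph.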

Hypergraph

A hypergraph is a generalization of the graph structure: whereas an edge in an ordinary graph connects exactly two nodes, an edge in a hypergraph can connect several nodes. At ICML, Amazon researchers present new approaches to the construction of hypergraph neural networks.

From hypergraph energy functions to hypergraph neural networks

Yuxin Wang, Quan Gan, Xipeng Qiu, Xuanjing Huang, David Paul Wipf
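The difference between graph edges and hyperedges is easiest to see in an incidence matrix. The sketch below builds one for a hypothetical hypergraph and applies one simple form of node-to-hyperedge-to-node averaging that appears in many hypergraph neural network designs; it is an illustration, not the energy-function-based construction proposed in the paper.

```python
from typing import List, Sequence, Set

def incidence_matrix(num_nodes: int, hyperedges: Sequence[Set[int]]) -> List[List[int]]:
    """H[v][e] = 1 if node v belongs to hyperedge e. In an ordinary graph each
    column has exactly two ones; in a hypergraph a column can have any number."""
    if any(len(e) == 0 for e in hyperedges):
        raise ValueError("hyperedges must be non-empty")
    return [[1 if v in e else 0 for e in hyperedges] for v in range(num_nodes)]

def node_to_edge_to_node(H: List[List[int]], x: Sequence[float]) -> List[float]:
    """Average node features into each hyperedge, then average hyperedge
    values back into each node (nodes in no hyperedge keep their own value)."""
    num_nodes = len(H)
    num_edges = len(H[0]) if H else 0
    edge_vals = []
    for e in range(num_edges):
        members = [v for v in range(num_nodes) if H[v][e]]
        edge_vals.append(sum(x[v] for v in members) / len(members))
    out = []
    for v in range(num_nodes):
        incident = [edge_vals[e] for e in range(num_edges) if H[v][e]]
        out.append(sum(incident) / len(incident) if incident else x[v])
    return out

# Hypothetical hypergraph: one hyperedge joins nodes 0, 1, 2; another joins 2 and 3.
H = incidence_matrix(4, [{0, 1, 2}, {2, 3}])
print(H)                                         # [[1, 0], [1, 0], [1, 1], [0, 1]]
print(node_to_edge_to_node(H, [3.0, 0.0, 3.0, 6.0]))  # [2.0, 2.0, 3.25, 4.5]
```

Node 2 belongs to both hyperedges, so its updated value blends information from all three of its co-members at once, something a single ordinary edge cannot express.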

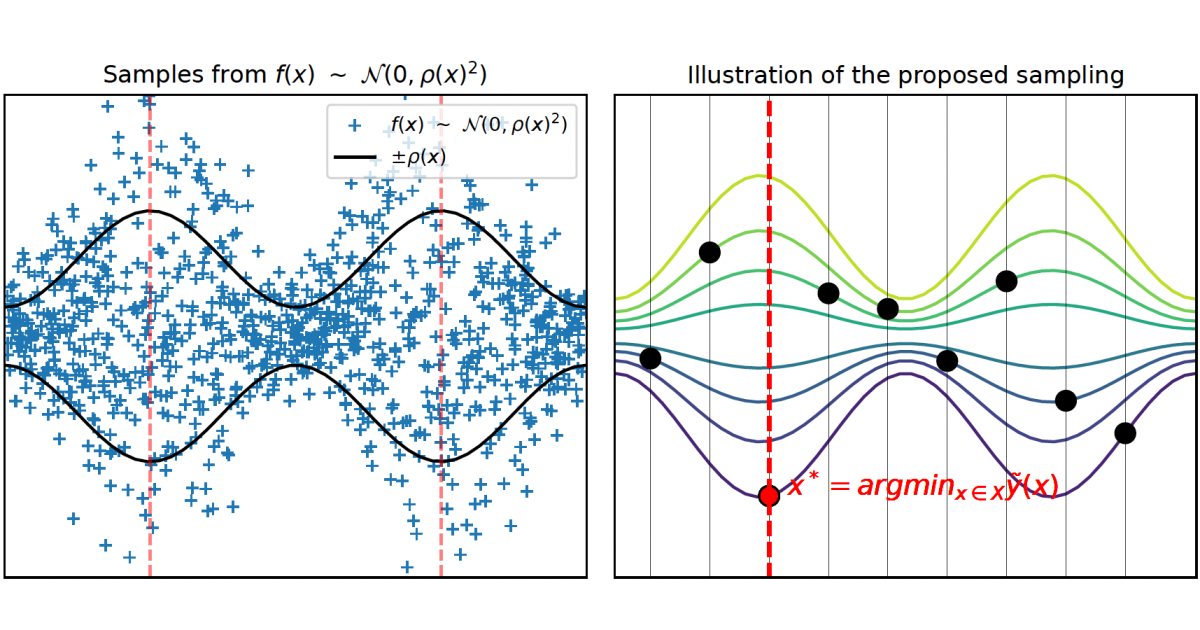

Hyperparameter optimization

Hyperparameters are attributes of neural-network models, such as the learning rate and the number and width of network layers, and optimizing them is a standard step in model training. At ICML, Amazon researchers propose conformal quantile regression as an alternative to the standard Gaussian processes for modeling functions during hyperparameter optimization.

Optimizing hyperparameters with conformal quantile regression

David Salinas, Jacek Golebiowski, Aaron Klein, Matthias Seeger, Cédric Archambeau
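Conformal quantile regression starts from a model that predicts lower and upper quantiles and then calibrates that band on held-out data. The sketch below shows only the generic split-conformal calibration step, assuming a hypothetical quantile model that always predicts [0, 1]; how the paper uses such models inside hyperparameter optimization is not reproduced here.

```python
import math
from typing import Sequence, Tuple

def cqr_margin(lo_preds: Sequence[float], hi_preds: Sequence[float],
               y_cal: Sequence[float], alpha: float) -> float:
    """Split-conformal correction for quantile regression.
    Each calibration point's conformity score is how far y lies outside the
    predicted [lo, hi] band (negative when it lies inside)."""
    scores = sorted(max(lo - y, y - hi) for lo, hi, y in zip(lo_preds, hi_preds, y_cal))
    n = len(scores)
    # Finite-sample-corrected rank: the ceil((n + 1) * (1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # not enough calibration points for this alpha
    return scores[k - 1]

def conformal_interval(lo: float, hi: float, margin: float) -> Tuple[float, float]:
    # Widen (or, if margin < 0, shrink) the raw quantile band by the margin.
    return lo - margin, hi + margin

# Hypothetical calibration targets for a quantile model that always predicts [0, 1].
y_cal = [0.5, 1.2, -0.3, 0.9, 1.5, 0.1, 0.4, 2.0, 0.7, 0.2]
margin = cqr_margin([0.0] * 10, [1.0] * 10, y_cal, alpha=0.2)
print(margin, conformal_interval(0.0, 1.0, margin))
```

Here the raw band misses too many calibration points, so the margin comes out positive (0.5) and the interval widens to (-0.5, 1.5). Unlike a Gaussian-process posterior, this calibration makes no assumption that the objective's noise is Gaussian.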

Independence testing

Independence testing is a crucial step in many statistical analyses, which seek to determine whether two variables are independent or not. At ICML, Amazon scientists present an approach to independence testing that adapts the number of samples to the difficulty of determining independence.

Sequential kernelized independence testing

Sasha Podkopaev, Patrick Bloebaum, Shiva Kasiviswanathan, Aaditya Ramdas
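Kernel-based independence tests typically rest on a kernel dependence measure. As a hedged illustration of that ingredient only, the sketch below computes the biased Hilbert-Schmidt Independence Criterion (HSIC) estimate with Gaussian kernels; the paper's sequential procedure and its exact statistic are not reproduced. The bandwidth of 1.0 and the synthetic data are assumptions.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]

def rbf_gram(xs: Sequence[float], bandwidth: float) -> Matrix:
    # Gaussian-kernel similarity between every pair of samples.
    return [[math.exp(-((a - b) ** 2) / (2 * bandwidth ** 2)) for b in xs] for a in xs]

def double_center(K: Matrix) -> Matrix:
    # Computes H K H with H = I - (1/n) 11^T: removes row, column, and overall means.
    n = len(K)
    row = [sum(r) / n for r in K]
    col = [sum(K[i][j] for i in range(n)) / n for j in range(n)]
    grand = sum(row) / n
    return [[K[i][j] - row[i] - col[j] + grand for j in range(n)] for i in range(n)]

def hsic(xs: Sequence[float], ys: Sequence[float], bandwidth: float = 1.0) -> float:
    """Biased HSIC estimate trace(K H L H) / (n - 1)^2; larger values indicate
    stronger dependence between xs and ys."""
    n = len(xs)
    Kc = double_center(rbf_gram(xs, bandwidth))
    L = rbf_gram(ys, bandwidth)
    # trace(H K H L) = sum_ij (HKH)_ij * L_ji, and L is symmetric.
    return sum(Kc[i][j] * L[i][j] for i in range(n) for j in range(n)) / (n - 1) ** 2

rng = random.Random(0)  # synthetic data for illustration
xs = [rng.gauss(0, 1) for _ in range(100)]
ys_dependent = [x + 0.1 * rng.gauss(0, 1) for x in xs]
ys_independent = [rng.gauss(0, 1) for _ in range(100)]
print(hsic(xs, ys_dependent), hsic(xs, ys_independent))
```

A statistic like this still needs a calibrated decision rule, such as a permutation threshold or, in a sequential setting, a rule for when enough samples have been seen to stop.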

Model selection

Model selection, which determines the specific model architecture and hyperparameter settings for a given task, typically involves a validation set split off from the model’s training data. At ICML, Amazon researchers propose using synthetic data for validation in situations where training data is scarce.

Synthetic data for model selection

Alon Shoshan, Nadav Bhonker, Igor Kviatkovsky, Matan Fintz, Gérard Medioni
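The conventional procedure the paragraph describes, selecting a hyperparameter on a validation split carved out of the training data, can be sketched as follows. The example assumes a one-dimensional ridge regression whose penalty `lam` is the hyperparameter, with hypothetical synthetic data; it shows the baseline workflow, not the paper's synthetic-validation approach.

```python
import random
from typing import Dict, Sequence, Tuple

def fit_ridge_1d(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    # Closed-form minimizer of (1/n) * sum (y - w x)^2 + lam * w^2.
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + len(xs) * lam)

def mse(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

def select_lambda(xs: Sequence[float], ys: Sequence[float], candidates: Sequence[float],
                  val_fraction: float = 0.2, seed: int = 0) -> Tuple[float, Dict[float, float]]:
    """Hold out a random validation split, fit each candidate on the rest,
    and return the candidate with the lowest validation error."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_val = max(1, int(len(idx) * val_fraction))
    val, train = idx[:n_val], idx[n_val:]
    scores: Dict[float, float] = {}
    for lam in candidates:
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        scores[lam] = mse(w, [xs[i] for i in val], [ys[i] for i in val])
    return min(scores, key=scores.__getitem__), scores

rng = random.Random(1)  # hypothetical data: y = 3x plus small noise
xs = [rng.uniform(-1, 1) for _ in range(100)]
ys = [3 * x + rng.gauss(0, 0.1) for x in xs]
best, scores = select_lambda(xs, ys, [0.0, 1.0, 100.0])
print(best, scores)
```

Every point moved into `val` is a point the model cannot train on, which is why this split becomes costly when training data is scarce.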

Physical models

Deep-learning methods have shown promise for scientific computing, where they can be used to predict solutions to partial differential equations (PDEs). At ICML, Amazon researchers examine the problem of enforcing known physical constraints, such as adherence to conservation laws, on the predictive outputs of machine learning models when computing solutions to PDEs.

Learning physical models that can respect conservation laws

Derek Hansen, Danielle Maddix Robinson, Shima Alizadeh, Gaurav Gupta, Michael Mahoney
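One generic way to make a model's predicted PDE solution respect a conservation law is to project it onto the set of solutions with the correct conserved quantity. The sketch below assumes a uniform 1-D grid with spacing `dx` and a known total mass (for example, from the initial condition under periodic or no-flux boundaries); it illustrates the constraint, and is not presented as the paper's method.

```python
from typing import List, Sequence

def project_to_conserved_mass(u: Sequence[float], dx: float, target_mass: float) -> List[float]:
    """Least-squares projection of a predicted solution u (values on a uniform
    grid with spacing dx) onto the set where dx * sum(u) == target_mass.
    For the linear constraint a.u = b with a = dx * ones, the minimal-norm
    correction is u - a * (a.u - b) / (a.a): the same shift for every cell."""
    n = len(u)
    excess = dx * sum(u) - target_mass
    shift = excess / (dx * n)
    return [ui - shift for ui in u]

pred = [1.0, 2.0, 3.0, 2.0]  # hypothetical model output; its mass is 0.5 * 8 = 4.0
fixed = project_to_conserved_mass(pred, dx=0.5, target_mass=3.0)
print(fixed)  # [0.5, 1.5, 2.5, 1.5]
```

The projection changes the prediction as little as possible in the least-squares sense while guaranteeing the conserved quantity exactly. A uniform shift can, however, violate other physical properties such as nonnegativity, which is one reason enforcing constraints well is harder than this sketch suggests.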

Tabular data

Extending the power of transformer models to tabular data has been a burgeoning area of research in recent years. At ICML, Amazon researchers show how to improve the generalizability of models trained on tabular data.

XTab: Cross-table pretraining for tabular transformers

Bingzhao Zhu, Xingjian Shi, Nick Erickson, Mu Li, George Karypis, Mahsa Shoaran