In the last few years, foundation models and generative-AI models, and especially large language models (LLMs), have become an important topic of AI research. That is true even in computer vision, with its increased focus on vision-language models, which yoke LLMs and image encoders.

This shift can be seen in the makeup of the Amazon papers accepted to this year's Computer Vision and Pattern Recognition Conference (CVPR 2024). A plurality of the papers deal with vision-language models, while a number of others concern related topics, such as visual question answering, hallucination mitigation, and retrieval-augmented generation. At the same time, however, classical computer vision topics, such as 3D reconstruction, object tracking, and pose estimation, remain well represented.

3D reconstruction

No more ambiguity in 360° room layout via bi-layout estimation

Yu-Ju Tsai, Jin-Cheng Jhang, Jingjing Zheng, Wei Wang, Albert Chen, Min Sun, Cheng-Hao Kuo, Ming-Hsuan Yang

ViewFusion: Towards multi-view consistency via interpolated denoising

Xianghui Yang, Yan Zuo, Sameera Ramasinghe, Loris Bazzani, Gil Avraham, Anton van den Hengel

Algorithmic information theory

Interpretable measures of conceptual similarity by complexity-constrained descriptive auto-encoding

Alessandro Achille, Greg Ver Steeg, Tian Yu Liu, Matthew Trager, Carson Klingenberg, Stefano Soatto

Geospatial analysis

Bridging remote sensors with multisensor geospatial foundation models

Boran Han, Shuai Zhang, Xingjian Shi, Markus Reichstein

Hallucination mitigation

Multi-modal hallucination control by visual information grounding

Alessandro Favero, Luca Zancato, Matthew Trager, Siddharth Choudhary, Pramuditha Perera, Alessandro Achille, Ashwin Swaminathan, Stefano Soatto

THRONE: An object-based hallucination benchmark for the free-form generations of large vision-language models

Prannay Kaul, Zhizhong Li, Hao Yang, Yonatan Dukler, Ashwin Swaminathan, CJ Taylor, Stefano Soatto

Metric learning

Learning for transductive threshold calibration in open-world recognition

Qin Zhang, Dongsheng An, Tianjun Xiao, Tong He, Qingming Tang, Ying Nian Wu, Joe Tighe, Yifan Xing, Stefano Soatto

Model robustness

GDA: Generalized diffusion for robust test-time adaptation

Yun-Yun Tsai, Fu-Chen Chen, Albert Chen, Junfeng Yang, Che-Chun Su, Min Sun, Cheng-Hao Kuo

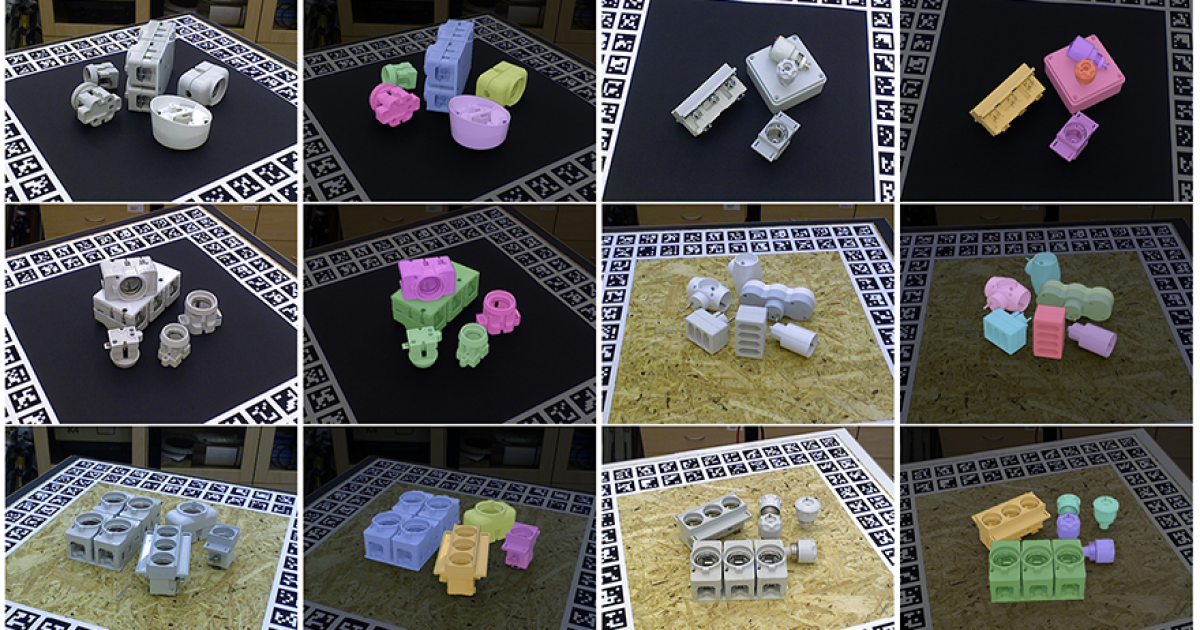

Object-centric learning

Adaptive slot attention: Object discovery with dynamic slot number

Ke Fan, Zechen Bai, Tianjun Xiao, Tong He, Max Horn, Yanwei Fu, Francesco Locatello, Zheng Zhang

Object tracking

Self-supervised multi-object tracking with path consistency

Zijia Lu, Bing Shuai, Yanbei Chen, Zhenlin Xu, Davide Modolo

Pose estimation

MRC-Net: 6-DoF pose estimation with MultiScale residual correlation

Yuelong Li, Yafei Mao, Raja Bala, Sunil Hadap

Responsible AI

FairRAG: Fair human generation via fair retrieval augmentation

Robik Shrestha, Yang Zou, James Chen, Zhiheng Li, Yusheng Xie, Tiffany Deng

Retrieval-augmented generation

CPR: Retrieval augmented generation for copyright protection

Aditya Golatkar, Alessandro Achille, Luca Zancato, Yu-Xiang Wang, Ashwin Swaminathan, Stefano Soatto

Security

Sharpness-aware optimization for real-world adversarial attacks for diverse compute platforms with enhanced transferability

Muchao Ye, Xiang Xu, Qin Zhang, Jon Wu

Video-language models

VidLA: Video-language alignment at scale

Mamshad Nayeem Rizve, Fan Fei, Jayakrishnan Unnikrishnan, Son Tran, Benjamin Yao, Belinda Zeng, Mubarak Shah, Trishul Chilimbi

Vision-language models

Accept the modality gap: An exploration in the hyperbolic space

Sameera Ramasinghe, Violetta Shevchenko, Gil Avraham, Ajanthan Thalaiyasingam

Enhancing vision-language pre-training with rich supervisions

Yuan Gao, Kunyu Shi, Pengkai Zhu, Edouard Belval, Oren Nuriel, Srikar Appalaraju, Shabnam Ghadar, Vijay Mahadevan, Zhuowen Tu, Stefano Soatto

GROUNDHOG: Grounding large language models to holistic segmentation

Yichi Zhang, Martin Ma, Xiaofeng Gao, Suhaila Shakiah, Qiaozi (QZ) Gao, Joyce Chai

Hyperbolic learning with synthetic captions for open-world detection

Fanjie Kong, Yanbei Chen, Jiarui Cai, Davide Modolo

Non-autoregressive sequence-to-sequence vision-language models

Kunyu Shi, Qi Dong, Luis Goncalves, Zhuowen Tu, Stefano Soatto

On the scalability of diffusion-based text-to-image generation

Hao Li, Yang Zou, Ying Wang, Orchid Majumder, Yusheng Xie, R. Manmatha, Ashwin Swaminathan, Zhuowen Tu, Stefano Ermon, Stefano Soatto

Visual question answering

GRAM: Global reasoning for multi-page VQA

Tsachi Blau, Sharon Fogel, Roi Ronen, Alona Golts, Roy Ganz, Elad Ben Avraham, Aviad Aberdam, Shahar Tsiper, Ron Litman

Question aware vision transformer for multimodal reasoning

Roy Ganz, Yair Kittenplon, Aviad Aberdam, Elad Ben Avraham, Oren Nuriel, Shai Mazor, Ron Litman

Synthesize step-by-step: Tools, templates and LLMs as data generators for reasoning-based chart VQA

Zhuowan Li, Bhavan Jasani, Peng Tang, Shabnam Ghadar