Teaching large language models (LLMs) to reason is an active research topic in natural-language processing, and a popular approach to the problem is the so-called chain-of-thought paradigm, in which a model is asked not only to give an answer but also to provide a rationale for that answer.

Given LLMs’ tendency to hallucinate (i.e., make false factual claims), the generated rationales can be inconsistent with the predicted answers, making them unreliable.

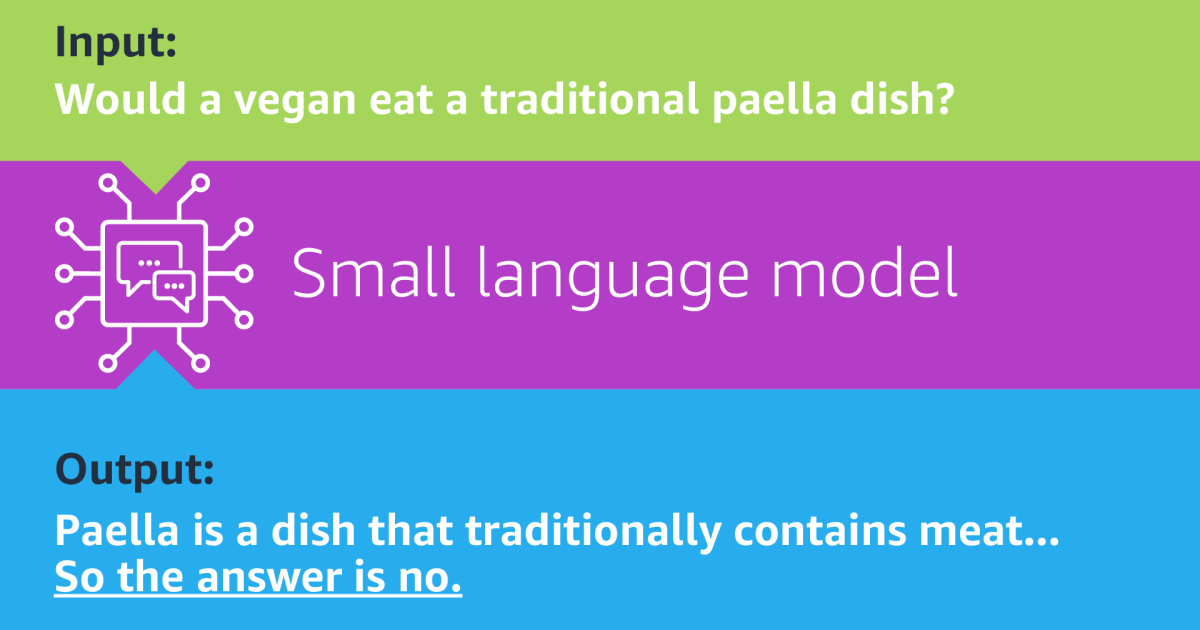

In a paper we presented at this year’s meeting of the Association for Computational Linguistics (ACL), we show how to improve the consistency of chain-of-thought reasoning through knowledge distillation: given pairs of questions and answers from a training set, an LLM (the “teacher”) generates rationales for a smaller “student” model, which learns both to answer questions and to provide rationales for its answers. Our paper received one of the conference’s outstanding-paper awards, reserved for 39 of the 1,074 papers accepted to the main conference.

With knowledge distillation (KD), we still have to contend with the possibility that the rationales generated by the teacher are false or vacuous. On the student side, the risk is that while the model may learn to produce rationales, and it may learn to provide answers, it does not learn the crucial logical relationship between the two; for example, it can learn inferential shortcuts between questions and answers that bypass the reasoning process entirely.

To limit hallucination on the teacher’s side, we use contrastive decoding, which ensures that the rationales generated for true assertions differ as much as possible from the rationales generated for false assertions.

To train the student model, we use counterfactual reasoning, in which the model is trained on both true and false rationales and must learn to give the answer that corresponds to the rationale, even if it is wrong. To ensure that this does not compromise model performance, during training we label true rationales “factual” and false rationales “counterfactual”.

To evaluate our model, we compared it to a chain-of-thought model built using ordinary knowledge distillation, on datasets for four different reasoning tasks. We asked human reviewers to evaluate the rationales generated by the teacher models. To evaluate the student models, we used the leakage-adjusted simulatability (LAS) metric, which measures the ability of a simulator (an external model) to predict the student’s outputs from the generated rationales. Across the board, our models outperformed the baselines while preserving accuracy on the reasoning tasks.
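As a rough illustration of what a leakage-adjusted score computes, the sketch below follows our reading of the metric's usual definition: the simulator's gain from seeing the rationale is averaged separately over examples where the rationale alone gives away the output ("leaked") and where it does not, and the two group means are then averaged. The function name, the argument names, and the handling of empty groups are assumptions for illustration, not details taken from this post.

```python
from typing import List

def las_score(
    student_out: List[str],   # outputs produced by the student model
    sim_with_rat: List[str],  # simulator predictions from question + rationale
    sim_question: List[str],  # simulator predictions from the question alone
    sim_rat_only: List[str],  # simulator predictions from the rationale alone
) -> float:
    """Average simulator gain from rationales, balanced across leakage groups."""
    groups = {True: [], False: []}
    for y, xr, x, r in zip(student_out, sim_with_rat, sim_question, sim_rat_only):
        leaked = (r == y)  # the rationale alone reveals the student's output
        gain = int(xr == y) - int(x == y)
        groups[leaked].append(gain)
    # Assumption: average only over non-empty groups.
    means = [sum(g) / len(g) for g in groups.values() if g]
    return sum(means) / len(means) if means else 0.0

score = las_score(
    student_out=["a", "b", "a", "b"],
    sim_with_rat=["a", "b", "a", "a"],
    sim_question=["a", "a", "b", "a"],
    sim_rat_only=["a", "b", "b", "a"],
)
```

A higher score means the rationales help the simulator recover the student's outputs beyond what the question alone allows, without that help coming only from rationales that restate the answer.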

Contrastive decoding

As our teacher model, we use a pretrained LLM whose parameters are frozen. To generate training examples for the student model, we use in-context learning: we give the teacher a handful of examples of questions, answers, and human-annotated rationales and then supply a final question-answer pair. The model generates the rationale for the final pair.
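The in-context setup described here can be pictured as plain prompt assembly. The sketch below is only illustrative: the `Demo` class, the `build_prompt` helper, and the `Q:`/`A:`/`Rationale:` layout are hypothetical choices, not the prompt format used in the paper.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Demo:
    question: str
    answer: str
    rationale: str  # human-annotated rationale for this demonstration

def build_prompt(demos: List[Demo], question: str, answer: str) -> str:
    """Format the demonstrations, then the final question-answer pair."""
    blocks = [
        f"Q: {d.question}\nA: {d.answer}\nRationale: {d.rationale}"
        for d in demos
    ]
    # The final pair ends with an open slot that the teacher completes.
    blocks.append(f"Q: {question}\nA: {answer}\nRationale:")
    return "\n\n".join(blocks)

demos = [
    Demo("Can fish breathe air?", "no",
         "Most fish extract oxygen from water with gills."),
]
prompt = build_prompt(demos, "Do penguins fly?", "no")
```

Because the teacher's parameters are frozen, everything the teacher knows about the task comes from this prompt; changing the answer in the final pair changes only the last block.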

During training, LLMs learn the probabilities of sequences of words. At generation time, they either choose the most probable word to continue a sequence or sample from the top-ranked words. This is the standard decoding step, and it does not guarantee that the generated rationale justifies the model’s answer.
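The two standard decoding choices can be sketched on a toy next-word distribution. The distribution, the helper names, and the value of `k` below are made up for illustration; a real LLM would supply probabilities over its whole vocabulary.

```python
import random
from typing import Dict

def greedy_pick(probs: Dict[str, float]) -> str:
    """Choose the single most probable next word."""
    return max(probs, key=probs.get)

def top_k_sample(probs: Dict[str, float], k: int, rng: random.Random) -> str:
    """Sample the next word from the k most probable candidates."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    tokens = [tok for tok, _ in top]
    weights = [p for _, p in top]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical next-word distribution.
next_word = {"the": 0.5, "a": 0.3, "penguins": 0.15, "zebra": 0.05}
rng = random.Random(0)
greedy = greedy_pick(next_word)
sampled = top_k_sample(next_word, k=2, rng=rng)
```

Neither rule looks at the answer the rationale is supposed to support, which is why a fluent but irrelevant rationale can come out of either one.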

We can control the decoding process without making any adjustments to the LLM’s parameters. With contrastive decoding, we perform the same in-context rationale generation twice, once with the true answer in the final question-answer pair and once with a perturbed answer.

Then, when we’re decoding for the true question-answer pair, we choose words that are not only probable given the true pair but also relatively improbable given the false pair. In other words, we force the rationale for the true pair to diverge from the rationale for the false pair. In this way, we ensure that the output leans toward rationales specific to the answers in the question-answer pairs.

In our experiments, we considered two types of perturbation of the true answers: null answers, where no answer was supplied at all, and false answers. We found that contrastive decoding using false answers consistently yielded better rationales than contrastive decoding using null answers.
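One way to picture this selection rule is as a per-word score that rewards probability under the true answer and penalizes probability under the perturbed answer. The sketch below is a minimal illustration under assumptions: the scoring formula (log-probability plus a weighted log-ratio), the `alpha` weight, and the toy distributions are ours, not the exact objective from the paper.

```python
import math
from typing import Dict

def contrastive_pick(
    p_true: Dict[str, float],       # next-word probabilities given the true answer
    p_perturbed: Dict[str, float],  # next-word probabilities given the perturbed answer
    alpha: float = 1.0,             # hypothetical weight on the contrast term
    eps: float = 1e-9,              # avoids log(0)
) -> str:
    def score(tok: str) -> float:
        log_true = math.log(p_true[tok] + eps)
        log_pert = math.log(p_perturbed.get(tok, 0.0) + eps)
        # Probable under the true answer, improbable under the perturbed one.
        return log_true + alpha * (log_true - log_pert)
    return max(p_true, key=score)

# Toy distributions for the next word of a rationale.
p_true = {"birds": 0.40, "wings": 0.35, "flightless": 0.25}
p_false = {"birds": 0.45, "wings": 0.45, "flightless": 0.10}

contrastive = contrastive_pick(p_true, p_false)       # "flightless"
greedy = contrastive_pick(p_true, p_false, alpha=0.0)  # "birds"
```

In this toy case greedy decoding picks "birds", a word that is about as likely under either answer, while the contrastive score prefers "flightless", whose probability drops sharply once the answer is perturbed. Setting `alpha` to zero recovers greedy decoding.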

Counterfactual reasoning

Previous research has shown that question-answering models will often exploit shortcuts in their training data to improve performance. For example, answering “who?” questions with the first proper name encountered in a source document will yield the right answer with surprising frequency.

Similarly, a chain-of-thought model may learn to use shortcuts to answer questions and to generate rationales as a parallel task, without learning the crucial connection between the two. The goal of training our model on a counterfactual-reasoning objective is to break that shortcut.

To generate counterfactual training data, we randomly vary the answers in question-answer pairs and generate the corresponding rationales, just as we did for contrastive decoding. Then we train the student model using the questions and rationales as input, and it must generate the corresponding answers.

This means that the student model may well see the same question multiple times during training, but with different answers (and rationales). The “factual” and “counterfactual” tags keep it from getting confused about its task.
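The construction of tagged training examples might look like the sketch below. Everything concrete in it is an assumption for illustration: the bracketed tag strings, the input/target layout, and the `stub_teacher` function, which stands in for the teacher LLM generating a rationale for a given answer.

```python
import random
from typing import Callable, Dict, List

def factual_example(question: str, answer: str, rationale: str) -> Dict[str, str]:
    """Factual objective: question in, rationale and answer out."""
    return {
        "input": f"[factual] {question}",
        "target": f"{rationale} So the answer is {answer}.",
    }

def counterfactual_example(
    question: str,
    gold: str,
    choices: List[str],
    teacher_rationale: Callable[[str, str], str],
    rng: random.Random,
) -> Dict[str, str]:
    """Counterfactual objective: question and rationale in, matching answer out."""
    wrong = rng.choice([c for c in choices if c != gold])  # perturbed answer
    rationale = teacher_rationale(question, wrong)
    return {
        "input": f"[counterfactual] {question} {rationale}",
        "target": wrong,  # the answer the rationale leads to, even though it is wrong
    }

def stub_teacher(question: str, answer: str) -> str:
    # Hypothetical stand-in for the teacher LLM.
    return f"(rationale supporting '{answer}')"

rng = random.Random(0)
fact = factual_example("Do penguins fly?", "no", "Penguins are flightless birds.")
cf = counterfactual_example("Do penguins fly?", "no", ["yes", "no"], stub_teacher, rng)
```

The same question appears in both examples with different targets, and the tag at the start of the input is what tells the student which task it is being asked to perform.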

In our experiments, we compared our approach to one that also uses in-context learning but uses greedy decoding to produce rationales, that is, a decoding method that always selects the highest-probability word. We also used two other baselines: an LLM that directly generates rationales through in-context learning and a model trained on human-annotated rationales.

Our study with human evaluators showed that in-context learning with contrastive decoding generated more persuasive rationales than in-context learning with greedy decoding:

| Teacher model | Grammaticality | New info | Supports answer |
| --- | --- | --- | --- |
| Greedy | 0.99 | 0.65 | 0.48 |
| Contrast.-empty | 0.97 | 0.77 | 0.58 |
| Contrast.-wrong | 0.97 | 0.82 | 0.63 |

Table: Human evaluation of data generated with greedy decoding, contrastive decoding using empty answers and contrastive decoding using incorrect answers.

In the experiments using the LAS metric, knowledge distillation using contrastive decoding alone outperformed all three baselines, and knowledge distillation with both counterfactual reasoning and contrastive decoding outperformed knowledge distillation with contrastive decoding alone. The model trained on the human-annotated dataset yielded the most accurate results on downstream tasks, but its rationales fared poorly. On average, our model was slightly more accurate than the one trained using greedy decoding.