When a customer clicks on an item in a list of product search results, it implies that the item is better than those not clicked. “Learning to rank” models use such implicit feedback to improve search results, comparing clicked and unclicked results in either a “pairwise” (comparing pairs of results) or “listwise” (judging a result’s position within the list) fashion.

A problem with this approach is the lack of absolute feedback. For example, if no items in the selection are clicked, it is a signal that none of the results was useful. But without clicked items for comparison, learning-to-rank models can do nothing with this information. Similarly, if a customer clicks on all the items in a list, it could indicate that all the results were useful, but it could also indicate a fruitless search to find even one useful result. Again, learning-to-rank models cannot tell the difference.
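The gap can be made concrete with a minimal sketch of how a pairwise method derives its training signal. The function name `preference_pairs` and the 0/1 click encoding are assumptions made for illustration; they are not taken from the paper.

```python
from itertools import combinations


def preference_pairs(labels):
    """Return (better, worse) index pairs implied by implicit-feedback labels.

    labels[i] is 1 if result i was clicked and 0 otherwise
    (a hypothetical encoding used only for this sketch).
    """
    pairs = []
    for i, j in combinations(range(len(labels)), 2):
        if labels[i] > labels[j]:
            pairs.append((i, j))
        elif labels[j] > labels[i]:
            pairs.append((j, i))
        # Equal labels express no preference, so no pair is emitted.
    return pairs


mixed = preference_pairs([0, 1, 0])       # one click among three results
no_clicks = preference_pairs([0, 0, 0])   # nothing clicked
all_clicks = preference_pairs([1, 1, 1])  # everything clicked
print(mixed, no_clicks, all_clicks)
```

Under these assumptions, the list with no clicks and the list with all clicks both yield zero pairs, so a purely relative objective receives no training signal from either.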

In a paper we are presenting at this year’s International Conference on Knowledge Discovery and Data Mining (KDD), we describe a new approach to learning to rank that factors in absolute feedback. It also uses the type of transformer models that are so popular in natural-language processing to attend to differences between items in the same list and predict their relative probability of being clicked.

In experiments, we compared our approach to a standard neural-network model and to a model that used gradient-boosted decision trees (GBDTs), which have historically outperformed neural models on learning-to-rank tasks. On three public datasets, the GBDTs did come out on top, although our model outperformed the baseline neural model.

On a large set of internal Amazon search data, however, our approach outperformed the baselines across the board. We hypothesize that this is because the public datasets contain only simple features and that neural rankers become state of the art only when the dataset is large and has a large number of features with complicated distributions.

Absolute feedback

Although the items in the public datasets are scored according to how well they match various search queries, we are chiefly interested in learning from implicit feedback, as it scales much better than learning from labeled data.

We thus assign each item in our datasets a value of 0 if it is not clicked, a value of 1 if it is clicked, and a value of 2 if it is purchased. We define the absolute value of a list of items as the value of its single highest-value member, on the assumption that the purpose of a product query is to identify a single item for purchase. Thus, a list with one item that results in a purchase has a higher value than a list all of whose items were clicked without purchase.
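The labeling scheme is simple enough to write down directly. The following is a minimal sketch; the names `item_label` and `list_value` are hypothetical, and treating an empty list as having value 0 is an assumption the text does not address.

```python
NOT_CLICKED, CLICKED, PURCHASED = 0, 1, 2


def item_label(clicked, purchased):
    """Map implicit feedback on one item to the 0/1/2 scale described above."""
    if purchased:
        return PURCHASED
    if clicked:
        return CLICKED
    return NOT_CLICKED


def list_value(item_labels):
    """Absolute value of a list: the value of its highest-value member.

    Returning 0 for an empty list is an assumption of this sketch.
    """
    return max(item_labels, default=NOT_CLICKED)


one_purchase = list_value([0, 2, 0])  # a single item was purchased
all_clicked = list_value([1, 1, 1])   # every item clicked, none purchased
no_feedback = list_value([0, 0, 0])   # nothing clicked
print(one_purchase, all_clicked, no_feedback)
```

Note that the list with a single purchase outranks the list in which every item was clicked, which matches the assumption that a query aims at one purchase.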

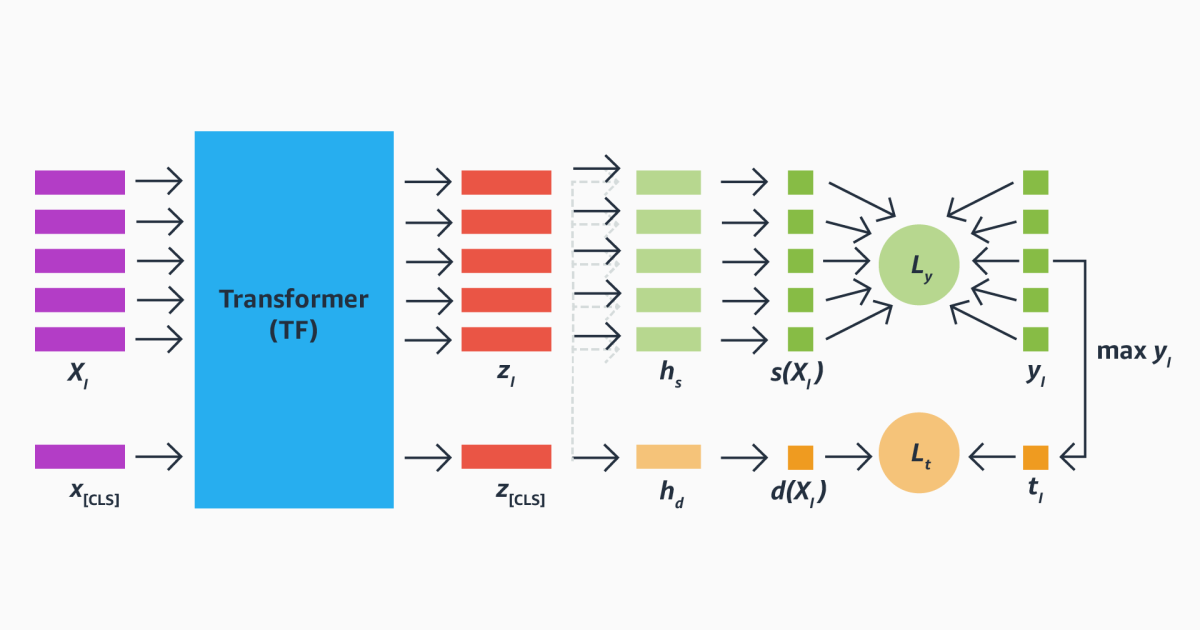

As input, our model receives information about each product in a list of products, but it also receives a class token. For each input, it generates a vector representation: the representations of the products capture information useful for assessing how well they match a product query, while the representation of the class token captures information about the list as a whole.

The representations pass to a set of scoring heads, which score them according to their relevance to the current query. During training, however, the score of the class token and the product scores are optimized according to separate loss functions.
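To illustrate how two separate objectives can coexist, here is a minimal sketch. The specific losses are assumptions: a listwise softmax cross-entropy for the product scores and a squared error between the class-token score and the list's absolute value. The article does not state which losses the paper uses, and `list_weight` is a hypothetical balancing parameter.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def listwise_item_loss(item_scores, item_labels):
    """Softmax cross-entropy against labels normalized to sum to 1.

    Returns 0.0 when every label is 0: a purely relative loss has
    nothing to compare in that case (assumed behavior for this sketch).
    """
    total = sum(item_labels)
    if total == 0:
        return 0.0
    probs = softmax(item_scores)
    return -sum(
        (y / total) * math.log(p)
        for y, p in zip(item_labels, probs)
        if y > 0
    )


def list_loss(cls_score, item_labels):
    """Squared error between the class-token score and the list's value.

    A stand-in chosen for illustration, not the paper's stated loss.
    """
    return (cls_score - max(item_labels)) ** 2


def total_loss(cls_score, item_scores, item_labels, list_weight=1.0):
    """Sum of the two separately defined objectives."""
    return (listwise_item_loss(item_scores, item_labels)
            + list_weight * list_loss(cls_score, item_labels))


# A list with no clicks: the item loss is zero, but the list loss still
# penalizes a class-token score that overestimates the list's value.
unclicked_loss = total_loss(1.5, [0.2, -0.1, 0.4], [0, 0, 0])
print(unclicked_loss)
```

In this sketch, a list without clicks still contributes a nonzero loss through the class-token term, which is the kind of signal a relative-only objective would discard.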

The transformer’s key design feature is its attention mechanism, which learns how heavily to weight different input features according to context. In our setting, the attention mechanism determines which product features are of particular importance given a product query.

As an example, a transformer is capable of learning that a $10 item should be contextualized differently in a list of $20 items than in a list of $5 items. Like the scoring of the class token, this contextualization is trained on the overall, absolute feedback, which allows our model to learn from lists that generate no clicks or purchases.
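The price example can be illustrated with a toy version of self-attention. This is a sketch under strong simplifying assumptions: each item is represented by its price alone, the query, key, and value projections are the identity rather than learned, and `scale` is a hypothetical constant that keeps the softmax from saturating. A trained transformer would learn its projections over many features.

```python
import math


def self_attention(values, scale=0.01):
    """Single-head self-attention over scalar features.

    Uses identity query/key/value projections, an untrained
    simplification made purely for illustration.
    """
    outputs = []
    for q in values:
        logits = [q * k * scale for k in values]
        m = max(logits)
        weights = [math.exp(l - m) for l in logits]
        z = sum(weights)
        # Each output is a weighted average of every item in the list.
        outputs.append(sum(w / z * v for w, v in zip(weights, values)))
    return outputs


# The same $10 item placed first in two different lists.
among_expensive = self_attention([10.0, 20.0, 20.0])
among_cheap = self_attention([10.0, 5.0, 5.0])
print(among_expensive[0], among_cheap[0])
```

Even in this toy setting, the representation of the $10 item is pulled upward among $20 items and downward among $5 items, so identical input features yield different contextualized representations depending on the rest of the list.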

Does it help?

Although our results on proprietary data were more impressive, we evaluated our proposal on publicly available datasets so that the research community can verify our results. On the internal data from Amazon Search, where a richer set of features is available, our model achieves better performance than any of the other methods, including strong GBDT models.

On the strength of these results, we are encouraged to keep learning from customer feedback. The user’s perspective is central to ranking problems, and click and purchase data appear to be a signal ripe for further research.